Designing a Scalable Data Warehouse Architecture: Best Practices and Examples

Mitra P

2025-03-30

Talk to our cloud experts

Subject tags

Designing a scalable data warehouse architecture is crucial for organizations that aim to manage and analyze large volumes of data effectively. A data warehouse acts as a centralized repository that integrates data from various sources, both internal and external. It stores the data in a way that allows for complex queries and business intelligence (BI) reporting. As businesses continue to generate vast amounts of data, the ability to scale your data warehouse to handle this increasing load is more important than ever.

To ensure your data warehouse can grow with your business needs, you must design an architecture that is flexible, high-performing, and capable of managing current and future data demands.

In this article, we’ll explore best practices and real-world examples that can guide you in building a robust and scalable data warehouse architecture.

What Is a Data Warehouse?

A data warehouse is a centralized repository that stores data from various sources, making it easier for businesses to perform complex queries and analyses. It serves as a single source of truth, integrating and organizing data from different departments, such as sales, marketing, or finance, for better decision-making.

The primary goal of a data warehouse is to turn raw data into valuable insights. This transformation happens through data extraction, cleaning, and structuring processes. Once the data is properly organized, it can be analyzed to reveal trends, patterns, and opportunities that drive business growth.

When you design a data warehouse, you’re essentially creating a system that collects, stores, and manages data in ways that empower businesses to make smarter, data-driven decisions.

Now that we clearly understand a data warehouse, the next step is to dive into the critical process of understanding business requirements to ensure that your data warehouse aligns with your organization’s specific needs.

Understanding Business Requirements

Aligning the architecture with business goals is crucial when designing a data warehouse. A data warehouse is not just a storage tool; it should serve as a foundation for decision-making and strategy. Without a clear understanding of business objectives, the data warehouse risks becoming a bottleneck rather than an asset.

Identifying and documenting business needs is the first step in designing a data warehouse that adds value. Start by asking key questions such as: What specific metrics or KPIs does the business need to track? What are the most critical decisions that will depend on the data stored in the warehouse? Answering these questions ensures that the data warehouse serves the business’s needs and provides relevant insights. This clarity will guide the design, ensuring the data warehouse is tailored to support the organization’s strategic objectives.

Now that we have discussed the importance of business alignment, let’s examine the issue of identifying and defining data sources.

Identifying and Defining Data Sources

Defining your data sources is crucial before designing a data warehouse. Integrating data becomes a challenge without clearly understanding where it comes from. The right data sources are the foundation for accurate and actionable insights.

Start by determining which data sources are essential for your business needs. These could range from internal sources like CRM, databases, and ERP systems to external sources like social media or third-party APIs. Each source has its characteristics—some may be structured, while others might be unstructured or semi-structured. Assessing these characteristics is important to ensure smooth integration and compatibility.

The next step is to assess the data quality, volume, and variety. High-quality data is critical for reliable analysis, so ensure that your data is accurate, consistent, and clean. Furthermore, consider the volume and variety of data you must handle. Will your business generate large amounts of data over time? Does the data come in different formats or require transformation before use? Knowing this will help you design a data warehouse that can scale and meet future demands.

Lastly, always consider security and privacy. Sensitive data, such as customer information, needs to be protected. Understanding how to secure data from various sources and comply with privacy regulations is crucial when designing a data warehouse. Ensure that data protection measures are built into the architecture from the start.

With your data sources identified and understood, the next step is choosing the right data warehouse architecture to support your business needs.

Choosing the Right Data Warehouse Architecture

When selecting the right data warehouse architecture, it’s important to understand the different types available. The architecture defines how data is stored, processed, and accessed. There are three common architectures: single-tier, two-tier, and three-tier.

- Single-tier architecture is simple, where the data is stored in one layer. It’s suitable for smaller systems but lacks scalability.

- The two-tier architecture separates the data storage from the data access layer, improving performance. It is often used when data volume and complexity increase.

- Three-tier architecture adds a layer between data storage and the user interface, enhancing scalability and security. Larger systems with high data complexity often use it.

You must evaluate data volume and integration complexity when designing a data warehouse. If your business generates vast amounts of data, you’ll need an architecture that can handle heavy processing loads and large datasets. Similarly, if data comes from numerous complex sources, you’ll need an architecture that seamlessly integrates and manages this variety.

Consider real-time data needs and scalability. Some industries, like finance or retail, require up-to-the-minute updates, while others might focus on batch processing. Your architecture should support this demand, ensuring data can be processed in real time or at scheduled intervals. Scalability is equally important. As your business grows, your data warehouse should grow with it, handling more data, more users, and more complex queries without compromising performance.

Lastly, selecting an architecture that supports flexibility and growth is essential. A well-designed data warehouse architecture must meet current needs and be adaptable to future changes. This flexibility ensures your system can evolve as new data sources, technologies, or business needs emerge.

Having established the architecture, the next vital step is creating a data model that aligns with your business objectives and requirements.

Designing the Data Model

Data modeling is at the core of a well-structured data warehouse. It defines how data is organized, stored, and accessed, ensuring it serves decision-makers' needs. A strong data model simplifies information retrieval and analysis, directly impacting business outcomes.

When designing a data warehouse, choosing the right data model is crucial. Star schema and Snowflake schema are two popular approaches. The Star schema is simpler and more efficient for querying, with fact tables connected to dimension tables. It’s often favored for its straightforwardness. On the other hand, the Snowflake schema normalizes the data, leading to a more complex structure but greater data integrity and minimizing redundancy. Depending on your data complexity, both models offer advantages in different scenarios.

Any data model must ensure data quality and consistency. Consistency across all data sources provides the warehouse’s integrity, making it easier to trust and analyze the data. Data quality checks should be integrated into the design process, helping prevent issues that could impact decision-making later.

Finally, integration and business logic considerations are vital. Your data model must align with your organization's business logic. This could involve integrating with external systems, applying specific business rules, or supporting the calculations for financial reports or marketing campaigns. These factors ensure the data warehouse stores data and provide actionable insights.

Once the data model is in place, it's time to plan the ETL processes that will bring the data to your warehouse.

Planning for ETL Processes

ETL (Extract, Transform, Load) is a data warehouse's backbone of data processing. It involves extracting data from different sources, transforming it into a usable format, and loading it into the warehouse. Before you design a data warehouse, defining your ETL requirements is essential. This includes determining the frequency of data updates, the type of data processing needed, and how to handle errors or inconsistencies during the process. A clear ETL strategy helps maintain data integrity and ensures the system functions as expected.

Choosing Between Batch Processing and Real-Time Processing

When considering ETL, you’ll choose between batch processing and real-time processing. Batch processing is used when data is updated in bulk at scheduled intervals. Manufacturing industries often use batch processing where timely data is less critical. In contrast, real-time processing is essential for applications that require immediate updates, such as e-commerce platforms or financial services. Real-time processing constantly refreshes the data, allowing businesses to act on the latest information.

Tips for Effective ETL Planning

Effective ETL planning is key to a successful data warehouse. One tip is to prioritize data quality at each stage of the process. Ensuring data is cleaned and validated before loading will save time and reduce errors later. Another tip is to scale your ETL processes to match business growth. As your data volumes increase, your ETL system should be able to handle the load without slowing down performance. Using automated ETL tools can help streamline the process, reducing manual errors and boosting efficiency.

After setting up your ETL processes, the next essential step is establishing security measures and access controls to safeguard the valuable data you've collected and structured.

Implementing Security and Access Controls

Securing your data warehouse is critical. The first step is identifying your business’s security and access control requirements. Consider your industry’s risk level and data sensitivity. For example, financial institutions need tighter access control and stronger encryption than retail businesses handling non-sensitive customer data. A detailed risk assessment can help you pinpoint what data requires protection and who should have access.

Once you understand the needs, the next step is implementing user authentication and role-based access control (RBAC). Authentication verifies that users are who they claim to be, often using multi-factor authentication (MFA) for added security. With RBAC, you can restrict access based on roles within the company, ensuring users only see data relevant to their responsibilities.

Equally crucial is data protection. Ensure compliance with industry regulations like GDPR, HIPAA, and CCPA. Incorporate encryption (both in transit and at rest) to secure sensitive data from unauthorized access. Regular security audits and access reviews are essential to identify vulnerabilities and ensure ongoing compliance.

Now that we have robust security measures, let’s examine best practices for creating a scalable and efficient data warehouse that will support your business’s long-term goals.

Best Practices in Data Warehouse Design

When designing an effective data warehouse, key strategies ensure scalability, flexibility, and efficient management. Following these best practices will streamline the design process and enhance the long-term effectiveness of your data warehouse.

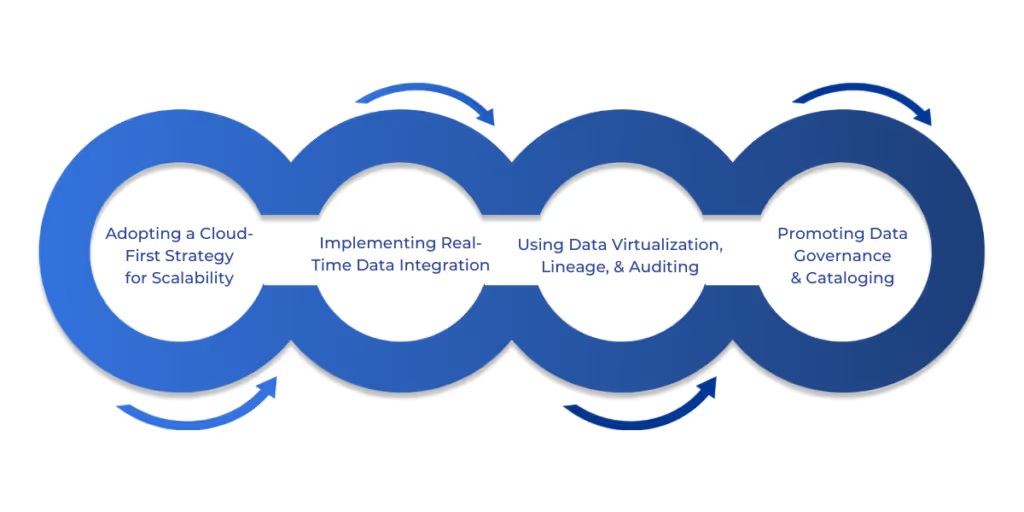

Adopting a Cloud-First Strategy for Scalability

A cloud-first strategy is crucial for businesses that expect rapid growth or need flexibility. Cloud platforms like Microsoft Azure or Google Cloud offer on-demand scalability, meaning you only pay for what you use. This is especially beneficial when the data volume increases unexpectedly. Adopting this strategy allows your data warehouse to scale up or down seamlessly as business needs evolve.

Implementing Real-Time Data Integration

Real-time data integration enables businesses to work with up-to-the-minute data. This is essential for staying competitive in industries like retail or financial services. Real-time data allows decision-makers to act quickly, whether adjusting inventory in response to consumer demand or analyzing stock market fluctuations in real-time. The key is to design a system that supports continuous data flow without causing delays or disruptions. This often involves integrating streaming technologies and setting up automated data pipelines to ensure data is available as soon as it’s generated.

Using Data Virtualization, Lineage, and Auditing

Data virtualization allows users to access data without storing it physically in the data warehouse. This reduces the need for additional storage, improves access speed, and ensures users can query data from various sources without duplicating it. Data lineage tracks where data originates, how it moves, and where it’s stored. This visibility helps teams identify data issues, improve quality, and ensure compliance. Additionally, auditing allows you to monitor data activity for security and compliance purposes, ensuring data integrity and reducing the risk of errors or fraud.

Promoting Data Governance and Cataloging

Good data governance is a foundation for a successful data warehouse. It ensures that data is consistent, accurate, and accessible to the right people. Establishing clear data ownership, quality standards, and security protocols helps prevent misuse or data quality issues. Additionally, implementing a data catalog provides a clear structure for organizing and locating data within the warehouse. This allows users to easily find the data they need without confusion, making it easier to derive valuable insights.

Conclusion

Designing a scalable data warehouse requires thoughtful planning and execution. The journey begins with clearly understanding your business objectives and aligning the architecture accordingly. A cloud-first strategy, real-time integration, and strong governance practices are essential to ensuring long-term success.

But a data warehouse is never truly "finished." Continuous monitoring and improvements are key to keeping your system responsive as your data needs evolve. Data growth, new technologies, and business changes will continue to shape the architecture. You ensure your system can scale seamlessly with the business’s demands by staying ahead with regular updates and optimizations.

At WaferWire, we understand the complexities of designing and implementing a data warehouse that meets your business's current needs and is adaptable for the future. Our end-to-end solutions, from strategy to implementation, are tailored to support enterprises, mid-market companies, and SMBs in creating powerful, scalable data systems.

Contact us today to begin your digital transformation journey, ensuring your business stays ahead of the curve.

Subscribe to Our Newsletter

Get instant updates in your email without missing any news

Copyright © 2025 WaferWire Cloud Technologies

.png)