Steps to Develop Robust Data Pipelines from Scratch

Mitra P

2025-02-28

Talk to our cloud experts

Subject tags

Getting the right data at the right time is essential for any company that wants to stay ahead of the competition. However, developing robust data pipelines isn’t as easy as flipping a switch. Each stage requires careful thought and precision, from the initial setup and ensuring smooth data ingestion to transforming and securely storing it for easy access.

This article will guide you through the critical steps of building a data pipeline from scratch. But we won’t stop there. We understand the complexities involved in pipeline development, which is why we’ll also present a streamlined framework to help simplify the process. With these insights, you’ll be equipped to create a scalable, efficient pipeline that ensures your data is always at your fingertips, ready to power your business forward.

What is a Data Pipeline?

A data pipeline is a systematic process that handles data’s movement, transformation, and analysis. It allows data to flow seamlessly from its source to where it’s needed, making it actionable and useful for decision-makers. Whether it’s collecting data from various systems, cleaning it, or presenting it in an easy-to-understand format, data pipelines play a crucial role in transforming raw data into valuable insights.

The purpose of a data pipeline is simple: ensure data is accessible and actionable for end-users. Without a reliable pipeline, data can become siloed, unreliable, or unusable. By creating efficient pipelines, businesses can ensure that the right people have the right information at the right time, driving smarter decisions and faster business outcomes.

Having grasped the concept of data pipelines, let’s examine the importance of creating strong data pipelines for businesses that aim to remain competitive and leverage data for informed decision-making.

Why Develop Robust Data Pipelines?

In business, information is power, but only if it’s organized, processed, and delivered efficiently. Developing robust data pipelines ensures that data remains actionable and ready for insights. A streamlined pipeline allows for the quick extraction of valuable insights directly impacting decision-making and analytics.

Data-driven decisions depend on timely and accurate information. Without a reliable pipeline, data processing slows down, increasing the risk of errors. This creates data bottlenecks, delaying access to critical insights and impacting everything from customer satisfaction to business growth. A well-designed pipeline removes these barriers, ensuring the smooth flow of data and providing timely access to the right information.

With an optimized pipeline, your organization can act faster on data insights. As a result, you can make quicker, more informed decisions, adapt more agilely to market shifts, and enhance overall efficiency in every department.

Let’s now dive into the essential components of a data pipeline and how each piece contributes to a smooth, efficient data flow.

Essential Components of a Data Pipeline

When developing robust data pipelines, each component is critical in ensuring data flows efficiently and remains valuable for decision-making. Here’s a breakdown of the essential building blocks of any effective data pipeline:

1. Data Ingestion

The first step is importing data from various sources, such as databases, APIs, or flat files. Efficient data ingestion is crucial for ensuring that no valuable data is left behind and that it reaches the pipeline in real-time or batch processes, depending on the business’s needs.

2. Data Processing

Once the data is ingested, it must be cleaned, transformed, and formatted. This step is vital for ensuring the data is consistent, accurate, and ready for use. Data processing prepares data for analysis by filtering out noise, correcting errors, and enriching it with additional information when necessary.

3. Data Storage

The processed data needs to be stored for further analysis or querying. Depending on the business requirements, data storage can be handled through data warehouses or data lakes. A data warehouse handles structured, relational data, while a data lake handles unstructured or semi-structured data.

4. Data Consumption

Finally, the data must be made accessible to users. Whether through APIs, dashboards, or direct queries, users can interact with the data through data consumption. This step ensures that the insights drawn from the data are actionable for internal analysis or customer-facing applications.

Considering these core components, let’s move on to the practical steps involved in developing robust data pipelines from scratch. First, we will define our objectives and identify our data sources.

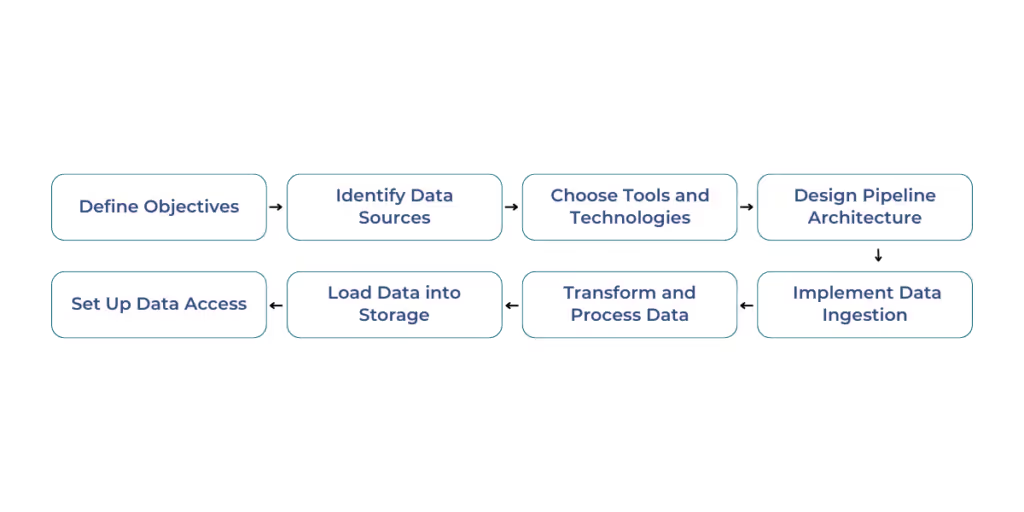

Steps to Develop Data Pipelines from Scratch

Each step serves a unique purpose in transforming raw data into actionable insights. Here's how to build your pipeline, step by step:

Step 1: Define Objectives

Before you start, clearly define your data objectives. Understand the business demands and user needs. This will steer the entire pipeline design and ensure the data is directed toward specific, valuable insights.

Step 2: Identify Data Sources

Determine where your data will come from. Will you be pulling it from databases, APIs, or files? Identifying these sources ensures the data pipeline is set up to access all necessary data points, making the process smooth and efficient.

Step 3: Choose Tools and Technologies

Opt for the right tools for your pipeline. Platforms like Apache Airflow are popular choices for scheduling and automating workflows. Select tools that fit your team's needs and scale as your pipeline grows.

Step 4: Design Pipeline Architecture

Plan your pipeline’s flow. You need to map out how the data will move from ingestion to processing and storage. This architecture will serve as the backbone of your data pipeline, ensuring each stage operates seamlessly.

Step 5: Implement Data Ingestion

Data needs to be ingested into the pipeline. Choose the methods you’ll use, whether it’s batch processing or real-time streaming. This step ensures that the data is consistently fed into your pipeline.

Step 6: Transform and Process Data

Cleaning, transforming, and enriching your data is crucial. This step helps remove noise and inaccuracies, ensuring the data is valuable for analysis. Processing can also involve enriching the data with additional context or combining data from multiple sources.

Step 7: Load Data into Storage

Decide how you will store the data once it’s processed. You might choose a data warehouse for structured data or a data lake for more varied types of data. This step ensures your data is stored securely and can be accessed when needed.

Step 8: Set Up Data Access

Finally, make sure that the data is easily accessible. Utilize APIs or dashboards to provide the right stakeholders with the ability to query or visualize the data.

Once you’ve built your data pipeline, the next critical phase is to ensure it runs efficiently. Let’s dive into how to monitor and maintain your pipeline to keep it running smoothly.

Monitoring and Maintaining Data Pipelines

Once you’ve set up your data pipeline, it is essential to ensure it runs smoothly and securely. Monitoring performance is the first step. Using monitoring tools, you can track how well your pipeline is performing, detect inefficiencies, and identify bottlenecks. Regularly checking the pipeline’s output ensures that data is processed quickly and accurately, helping you maintain high-quality results. For example, if you’re working with large volumes of real-time data, you’ll want to monitor both throughput and latency to ensure the pipeline keeps up.

Another key component is data security. It is crucial to ensure that sensitive data is properly handled. To protect data in transit and at rest, implement encryption at every stage of your pipeline. You should also set up access controls to restrict data access to authorized users.

Error Handling through Audit and Logging

Effective error handling is essential for maintaining the integrity of your data pipeline. Implementing thorough logging and audit processes enables you to track any issues that arise during data processing. When errors occur, logs help quickly pinpoint the problem, whether it’s a failed ingestion task, transformation error, or storage issue. Audit trails provide transparency, ensuring you can trace every action taken in the pipeline and resolve issues more efficiently. Reviewing logs regularly can proactively prevent recurring problems and ensure your pipeline remains reliable and consistent.

Now that we’ve discussed the significance of monitoring and securing your data pipeline, let’s examine how to scale and optimize it for improved performance and cost-effectiveness.

Scaling and Optimization

When developing robust data pipelines, scalability and optimization are critical to maintaining performance as your data grows. Your pipeline should be able to scale to handle increased data loads and optimize for efficiency as your business evolves.

Scale as Needed

As your data needs grow, so must your pipeline. Consider horizontal scaling, where you add more machines to distribute the load, or vertical scaling, where you increase the capacity of your existing infrastructure. Choosing the right type of scaling ensures that your pipeline can handle larger datasets without compromising performance. Microsoft Fabric simplifies this process by offering a unified platform supporting horizontal and vertical scaling. With its auto-scaling capabilities, Microsoft Fabric ensures that your data infrastructure expands seamlessly, maintaining high performance and reliability as your data volume increases.

Optimize for Cost-Efficiency

While performance is important, it’s equally crucial to optimize costs. Scaling and optimizing should balance budget constraints with performance needs. This means carefully selecting cloud services, storage solutions, and processing options that give you the best value without sacrificing speed or reliability.

After addressing scaling and cost optimization, the next step is implementing best practices to ensure your pipeline remains effective, efficient, and adaptable to changing business needs.

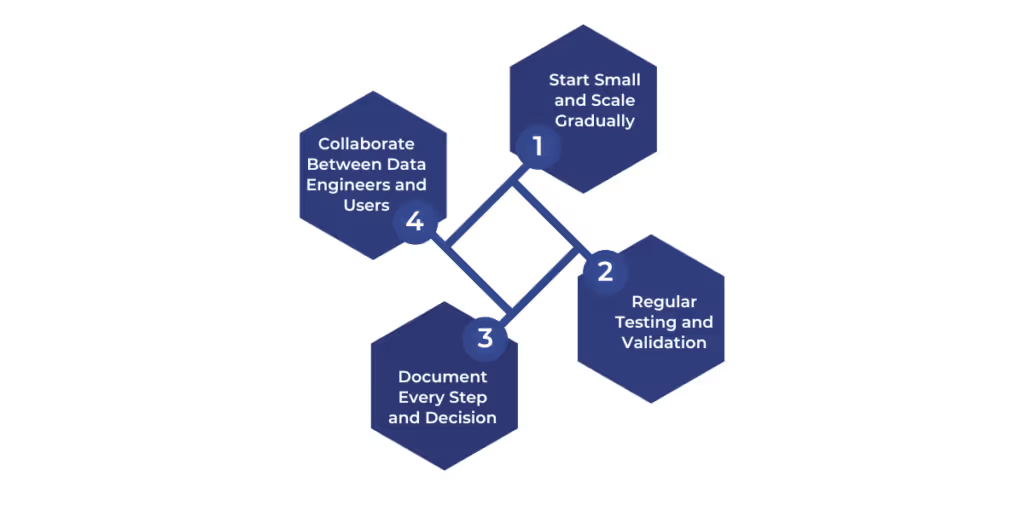

Best Practices

When developing robust data pipelines, adhering to best practices can help ensure your pipeline remains efficient, scalable, and reliable. These practices optimize data processing and prevent common pitfalls resulting in costly delays or errors.

- Start Small and Scale Gradually: It’s tempting to build a complex pipeline immediately, but starting with a simple, scalable solution is crucial. Focus on key data needs and expand the pipeline as you grow.

- Regular Testing and Validation: Your data pipeline should be tested regularly to ensure it’s processing data correctly. Use automated testing for data consistency, performance, and security. Regular validation checks will help ensure your pipeline works as expected, reducing the risk of errors.

- Document Every Step and Decision: Clear documentation of every step in the process, from data ingestion to final consumption, ensures that teams can easily understand and manage the pipeline. Documenting your decisions during the pipeline design phase is also essential for troubleshooting and future enhancements.

- Collaborate Between Data Engineers and Users: Ensure close collaboration between your data engineers and users. Engineers build the pipeline, but users interact with the data. Regular feedback from users will allow data engineers to adjust and fine-tune the pipeline, ensuring it serves the organization's needs.

Conclusion

Developing robust data pipelines is not merely a technical necessity; it’s a foundational strategy that drives business success. As organizations collect and utilize more data, a seamless and efficient pipeline becomes critical. A well-designed pipeline ensures smooth data flow and delivers actionable insights that improve decision-making. Without it, businesses may face data bottlenecks, delays, and unreliable analytics, which could impact their competitiveness.

However, establishing a pipeline is just the beginning. To truly harness its power, continuous optimization is key. As your business grows and technology evolves, so must your pipeline. An adaptable and scalable pipeline can seamlessly incorporate new tools, technologies, and data sources, ensuring your data infrastructure keeps pace with your needs.

At WaferWire, we specialize in developing robust data pipelines that grow with your business. Our solutions are efficient, secure, and scalable, designed to keep your data flow uninterrupted and your business competitive. Whether you’re an enterprise or mid-market company, we provide the strategy, tools, and support you need to enhance your data infrastructure. Ready to elevate your data capabilities? Contact us today.

BLOG LINK

https://waferwire.com/blog/understanding-data-lakehouse/

https://waferwire.com/blog/cost-effective-data-management-techniques-tools/

https://waferwire.com/blog/key-principles-scalable-architecture/

https://waferwire.com/blog/modern-data-pipeline-design/

Subscribe to Our Newsletter

Get instant updates in your email without missing any news

Copyright © 2025 WaferWire Cloud Technologies

.png)