Fabric Data Integration Best Practices Guide

Mownika R.

2025-05-22



Tired of your organization's valuable data being locked away in isolated systems, hindering your growth and insights? Did you know businesses with integrated data are 23 times more likely to acquire new customers? This is where the transformative capabilities of data integration become essential, and Microsoft Fabric emerges as a groundbreaking platform designed to orchestrate this vital process.

This guide covers Fabric's role in unifying your data: the core components that make integration possible, the tools for transforming and visualizing data, the best practices that keep integration sustainable, and the common challenges with their solutions.

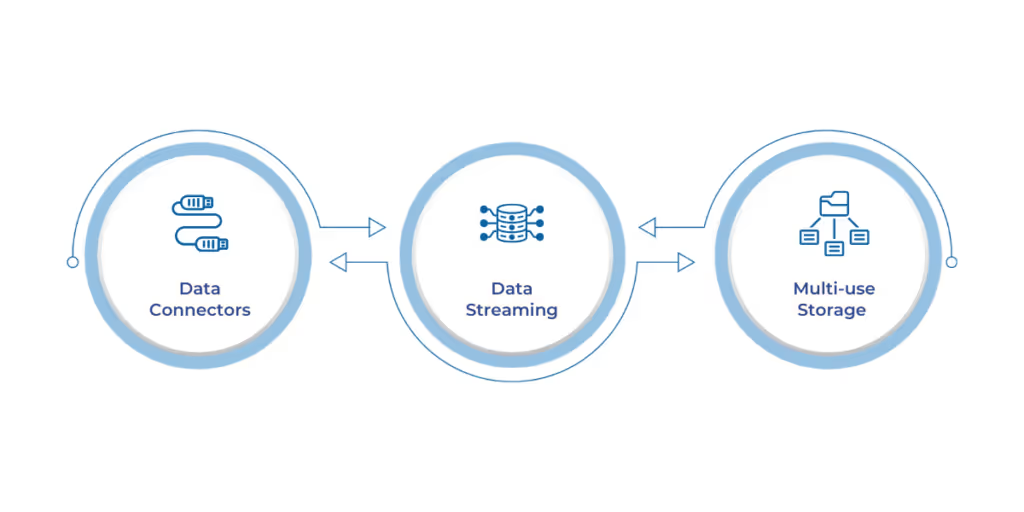

Core Components of Fabric for Seamless Data Integration

Microsoft Fabric offers a comprehensive suite of tools engineered for effortless data connection, processing, and storage:

1. Data Connectors: Building Bridges Across Information Sources

Fabric includes an extensive library of pre-built connectors to a broad range of data sources, including on-premises SQL systems, cloud storage such as Azure Blob Storage, and popular third-party applications. This removes much of the manual work of data retrieval and keeps information flowing between systems.

2. Data Streaming: Capturing the Real-Time Pulse of Your Business

For organizations requiring immediate awareness of dynamic data, Fabric's data streaming functionalities enable the live processing of information from sources such as IoT devices, website activity, and social media platforms. This facilitates instant alerts, proactive interventions, and up-to-the-minute decision-making.

3. Versatile Data Storage Solutions: A Robust and Adaptable Foundation

Fabric stores data in OneLake, a unified data lake built on Azure Data Lake Storage Gen2 that handles vast quantities of structured and unstructured data scalably and cost-effectively. It also works alongside other Azure data stores, such as the dependable Azure SQL Database for relational data and the globally distributed Azure Cosmos DB for NoSQL workloads that demand high availability and low latency. This gives you the flexibility to keep each dataset in the store that best fits its requirements.


Beyond simply connecting and storing data, Fabric provides powerful mechanisms to refine, structure, and enrich your information, turning raw data into valuable insights.

Transforming Raw Data into Actionable Insights: Fabric's Tools

Two tools do most of this work:

1. Azure Data Factory: Orchestrating Intelligent Data Pipelines

Within Fabric, Data Factory offers both visual and code-based authoring for building resilient, scalable data integration and transformation pipelines. You can design intricate workflows that extract, transform, and load data from various sources while keeping it accurate and consistent.

2. Azure Databricks: Unleashing Advanced Analytics and Machine Learning Potential

Fabric integrates with Azure Databricks for sophisticated data enrichment, advanced analytics, and machine learning. This lets data scientists and engineers develop predictive models, run complex analyses, and extract deeper, more nuanced insights from your unified data.


Once your data is integrated and transformed, the next crucial step is making it accessible and understandable. Fabric simplifies the process of visualizing and disseminating your integrated data.

Visualizing and Sharing Knowledge: Fabric's Integration Platforms

Two platforms cover this step:

1. Power BI: Crafting Compelling Data Narratives

The seamless integration with Power BI allows direct connection to your processed data within Fabric, enabling the creation of interactive dashboards and insightful reports. Power BI's intuitive interface and rich visual capabilities empower business users to explore data, identify trends, and communicate findings effectively.

2. Azure Synapse Analytics: Unifying Data Warehousing and Big Data Capabilities

Azure Synapse Analytics within Fabric combines the strengths of enterprise data warehousing and big data processing. This lets you run complex queries across extensive datasets and build robust data models for comprehensive reporting and analysis.


While Microsoft Fabric provides exceptional tools, maximizing its advantages requires adhering to essential practices that ensure successful and sustainable data integration.

Guiding Principles: Best Practices for Effective Data Integration

Consider these eight practices:

1. Defining Clear and Specific Objectives

Establish well-defined business goals before initiating any data integration project and pinpoint the precise insights you aim to uncover. This will steer your integration strategy and ensure alignment with your overarching business objectives.

2. Implementing Strong Data Governance and Security Frameworks

Establish clear data governance protocols and implement robust security measures to safeguard data privacy, ensure regulatory compliance, and maintain data integrity throughout the integration lifecycle.

3. Prioritizing Data Quality Management and Continuous Monitoring

Implement systematic data profiling, validation, and cleansing processes to keep data accurate. Monitor your data pipelines and quality metrics continuously so that issues are identified and addressed before they reach downstream reports.
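As a rough illustration of what rule-based validation can look like inside a pipeline step, here is a minimal Python sketch. It is not a Fabric API: the `Rule` class, the rule definitions, and the column names (`customer_id`, `amount`, `country`) are all hypothetical and would be replaced by rules that match your own data.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass(frozen=True)
class Rule:
    """A hypothetical quality rule: a name plus a predicate that is True for valid rows."""
    name: str
    check: Callable[[dict], bool]

# Example rules for an assumed customer-payments feed.
RULES = [
    Rule("customer_id is present", lambda r: bool(r.get("customer_id"))),
    Rule("amount is non-negative",
         lambda r: isinstance(r.get("amount"), (int, float)) and r["amount"] >= 0),
    Rule("country is a 2-letter code",
         lambda r: isinstance(r.get("country"), str) and len(r["country"]) == 2),
]

def validate(rows, rules=RULES):
    """Split rows into valid rows and (row, failed rule names) pairs."""
    valid, rejected = [], []
    for row in rows:
        failures = [rule.name for rule in rules if not rule.check(row)]
        if failures:
            rejected.append((row, failures))
        else:
            valid.append(row)
    return valid, rejected

sample = [
    {"customer_id": "C1", "amount": 19.5, "country": "US"},
    {"customer_id": "", "amount": -4, "country": "USA"},
]
valid, rejected = validate(sample)
print(len(valid), "valid;", len(rejected), "rejected")
for row, failures in rejected:
    print(row, "->", failures)
```

Keeping rejected rows together with the names of the rules they failed makes it easier to report quality metrics over time instead of silently dropping bad data.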

4. Understanding Your Data Landscape Thoroughly

Before integrating any data, invest time in thoroughly understanding the structure, semantics, and relationships within each source system. Document data schemas, data dictionaries, and data flows. Identify potential data inconsistencies, overlaps, and gaps. This deep understanding will inform your integration design and transformation logic, minimizing errors and ensuring accurate data mapping.

5. Designing Scalable and Flexible Integration Architectures

Plan for future growth and changing business needs from the start. Use modular designs and loosely coupled components so that you can add new data sources and modify existing pipelines without disrupting the entire system. Rely on Fabric's inherent scalability to handle increasing data volumes and processing demands.

6. Choosing the Right Integration Patterns and Tools

For each integration scenario, select the integration pattern (e.g., batch processing, real-time streaming, change data capture) and the Fabric tool (e.g., Data Factory, Dataflows Gen2, Spark Notebooks) that best fits its data sources, data volumes, latency requirements, and transformation complexity. Avoid a one-size-fits-all approach.

7. Iterative Development and Testing

Adopt an iterative development approach, breaking down complex integration projects into smaller, manageable phases. Implement rigorous testing at each stage, including unit testing, integration testing, and user acceptance testing (UAT), to ensure your data pipelines' accuracy, reliability, and performance.
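Unit testing is easiest when transformation logic lives in small, pure functions that can run outside the pipeline. The sketch below uses Python's standard `unittest` module; the `normalize_order` function and its field names are hypothetical stand-ins for your own transformation code.

```python
import unittest

def normalize_order(raw: dict) -> dict:
    """Hypothetical transformation: trim the ID, upper-case the currency, cast the amount."""
    return {
        "order_id": raw["order_id"].strip(),
        "currency": raw["currency"].strip().upper(),
        "amount": round(float(raw["amount"]), 2),
    }

class NormalizeOrderTest(unittest.TestCase):
    def test_cleans_whitespace_and_case(self):
        result = normalize_order({"order_id": " A-17 ", "currency": "usd", "amount": "12.5"})
        self.assertEqual(result, {"order_id": "A-17", "currency": "USD", "amount": 12.5})

    def test_rejects_non_numeric_amount(self):
        # float("n/a") raises ValueError, so bad input fails loudly instead of loading.
        with self.assertRaises(ValueError):
            normalize_order({"order_id": "A-18", "currency": "EUR", "amount": "n/a"})

# Run the two tests explicitly so the script also works inside a notebook cell.
suite = unittest.defaultTestLoader.loadTestsFromTestCase(NormalizeOrderTest)
result = unittest.TextTestRunner(verbosity=0).run(suite)
```

Tests like these cover the unit level only; integration testing and UAT still need to run against real pipeline outputs.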

8. Comprehensive Documentation and Knowledge Sharing

Maintain detailed documentation of your integration processes, including data sources, data flows, transformation logic, and monitoring procedures. Foster knowledge sharing among your team to ensure continuity and facilitate troubleshooting and future enhancements.


While the journey to unified data with Fabric is enriching, it's important to acknowledge potential challenges and prepare proactive solutions to overcome them.

Overcoming Obstacles: Challenges and Solutions in Data Integration

Plan for these eight challenges, each paired with suggested solutions:

1. Addressing Data Silos

Challenge: Data residing in isolated systems with different formats, structures, and access mechanisms hinders a unified view.

Solutions: Use Fabric's connectors to bridge these silos. Use the Lakehouse as a centralized repository to land and harmonize data from various sources. Where appropriate, use data virtualization (for example, OneLake shortcuts) to access data without physically moving it while still providing a unified logical view.
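To make "harmonize" concrete, the following Python sketch maps records from two silos onto one unified schema before they are landed. Everything specific here is an assumption made for illustration: the source names (`crm`, `billing`), the field mappings, and the date formats.

```python
from datetime import datetime

# Hypothetical field mappings: source column name -> unified column name.
FIELD_MAPS = {
    "crm": {"CustomerID": "customer_id", "FullName": "name", "SignupDate": "signup_date"},
    "billing": {"cust_id": "customer_id", "cust_name": "name", "created": "signup_date"},
}

# Hypothetical date format used by each source system.
DATE_FORMATS = {"crm": "%m/%d/%Y", "billing": "%Y-%m-%d"}

def harmonize(source: str, record: dict) -> dict:
    """Rename fields to the unified schema and normalize dates to ISO 8601."""
    mapping = FIELD_MAPS[source]
    unified = {mapping[key]: value for key, value in record.items() if key in mapping}
    parsed = datetime.strptime(unified["signup_date"], DATE_FORMATS[source])
    unified["signup_date"] = parsed.date().isoformat()
    unified["source_system"] = source  # keep lineage for later auditing
    return unified

landed = [
    harmonize("crm", {"CustomerID": "42", "FullName": "Ada Lovelace", "SignupDate": "03/15/2024"}),
    harmonize("billing", {"cust_id": "42", "cust_name": "Ada Lovelace", "created": "2024-03-15"}),
]
for row in landed:
    print(row)
```

Once both sources share column names and date formats, matching and deduplicating the same customer across systems becomes a straightforward join on `customer_id`.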

2. Solving Data Quality Issues

Challenge: Inconsistent, inaccurate, or incomplete data can lead to flawed insights and poor decision-making.

Solutions: Implement robust data profiling to identify quality issues early. Utilize Fabric's data transformation tools (Data Factory, Dataflows Gen2, Databricks) to cleanse, standardize, and validate data. Implement data quality rules and checks within your pipelines. Establish data governance processes to prevent data quality issues at the source.

3. Automating Complex Data Transformations

Challenge: Manual data transformations are time-consuming, error-prone, and difficult to scale.

Solutions: Leverage Azure Data Factory's visual interface and code-based options (Python, SQL) to design and automate complex transformation logic. Utilize Azure Databricks for advanced transformations requiring Spark processing and machine learning capabilities. Implement parameterized pipelines and reusable components to improve efficiency and maintainability.
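The idea of parameterized pipelines built from reusable components can be sketched in plain Python, independent of any Fabric or Data Factory API. The step functions (`drop_nulls`, `rename`, `divide`) and the column names below are hypothetical; the point is that each step takes parameters and the same steps can be recombined for different feeds.

```python
from functools import reduce
from typing import Callable

# A step takes a list of rows and returns a new list of rows.
Step = Callable[[list[dict]], list[dict]]

def drop_nulls(column: str) -> Step:
    """Reusable step: remove rows where the given column is None or missing."""
    return lambda rows: [r for r in rows if r.get(column) is not None]

def rename(old: str, new: str) -> Step:
    """Reusable step: rename one column."""
    return lambda rows: [{(new if k == old else k): v for k, v in r.items()} for r in rows]

def divide(column: str, divisor: float) -> Step:
    """Reusable step: divide a numeric column, e.g. to convert cents to whole units."""
    return lambda rows: [{**r, column: r[column] / divisor} for r in rows]

def build_pipeline(*steps: Step) -> Step:
    """Compose steps left to right into a single callable pipeline."""
    return lambda rows: reduce(lambda acc, step: step(acc), steps, rows)

# The same components, configured with parameters for one particular feed.
sales_pipeline = build_pipeline(
    drop_nulls("amt"),
    rename("amt", "amount"),
    divide("amount", 100),
)

rows = [{"id": 1, "amt": 1250}, {"id": 2, "amt": None}]
cleaned = sales_pipeline(rows)
print(cleaned)
```

A second feed with different column names would reuse the same three step functions with different arguments rather than a copy of the logic.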

4. Ensuring Security and Compliance

Challenge: Integrating data from various sources can raise concerns about data privacy, security, and regulatory compliance.

Solutions: Leverage Microsoft Fabric's inherent security features, including encryption, access controls, and auditing. Implement data masking and tokenization techniques for sensitive data. Ensure compliance with relevant regulations by carefully managing data access and implementing appropriate data residency controls.
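As a simple illustration of masking and tokenization, the Python sketch below masks an email address and replaces an identifier with a keyed hash. This is a deliberately simplified stand-in: the keyed hash is a form of pseudonymization rather than vault-based tokenization, and the `SECRET_KEY` value, function names, and field names are assumptions for the example, not Fabric features.

```python
import hashlib
import hmac

# Assumption: in practice the key comes from a managed secret store, never from source code.
SECRET_KEY = b"replace-with-a-managed-secret"

def mask_email(email: str) -> str:
    """Keep the first character and the domain; hide the rest of the local part."""
    local, _, domain = email.partition("@")
    return f"{local[:1]}{'*' * max(len(local) - 1, 0)}@{domain}"

def tokenize(value: str, key: bytes = SECRET_KEY) -> str:
    """Deterministic keyed hash: the same input yields the same token, so joins still work."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()[:16]

record = {"email": "jane.doe@example.com", "ssn": "123-45-6789"}
safe = {
    "email": mask_email(record["email"]),
    "ssn_token": tokenize(record["ssn"]),
}
print(safe)
```

Because the token is deterministic for a given key, analysts can still count or join on the tokenized column without ever seeing the original value.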

5. Managing Data Volume and Velocity

Challenge: Processing large volumes of data and high-velocity real-time streams can strain traditional infrastructure.

Solutions: Use Fabric's scalable architecture, including Azure Data Lake Storage for massive data storage and Spark in Azure Databricks for parallel processing. Use Fabric's data streaming capabilities (Eventstream, KQL Database) for efficient real-time data ingestion and analysis.
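Streaming engines typically summarize high-velocity data with windowed aggregations. The plain-Python sketch below shows the logic of a tumbling (fixed, non-overlapping) window count; it does not use any Fabric streaming API, and the event shape (a timestamp in seconds plus a device name) is an assumption for illustration.

```python
from collections import defaultdict

WINDOW_SECONDS = 60  # assumed window size

def tumbling_counts(events, window=WINDOW_SECONDS):
    """Count events per (window start, device) using fixed, non-overlapping windows."""
    counts = defaultdict(int)
    for timestamp, device in events:
        window_start = timestamp - (timestamp % window)  # floor to the window boundary
        counts[(window_start, device)] += 1
    return dict(counts)

# Hypothetical IoT events: (seconds since start, device name).
events = [
    (0, "sensor-a"),
    (15, "sensor-a"),
    (59, "sensor-b"),
    (60, "sensor-a"),
    (125, "sensor-b"),
]
counts = tumbling_counts(events)
for (window_start, device), n in sorted(counts.items()):
    print(window_start, device, n)
```

In a real streaming service the same grouping runs incrementally as events arrive, and it must also handle late or out-of-order events, which this sketch ignores.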

6. Handling Schema Evolution and Data Model Changes

Challenge: Source systems often undergo schema changes, which can break existing data pipelines.

Solutions: Design flexible data models and integration pipelines that can adapt to schema evolution. Implement schema-on-read approaches where feasible. Utilize data mapping and transformation tools that can handle schema variations. Implement robust error handling and monitoring to detect and manage schema changes proactively.
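One lightweight way to detect schema changes proactively is to compare each incoming record against the schema the pipeline expects and report the differences before loading. The Python sketch below assumes a hypothetical expected schema and record; it is an illustration of the check, not a built-in Fabric feature.

```python
# Hypothetical schema the pipeline was built against: column name -> Python type.
EXPECTED_SCHEMA = {"order_id": str, "amount": float, "placed_at": str}

def detect_drift(record: dict, expected: dict = EXPECTED_SCHEMA) -> dict:
    """Report columns that are missing, newly added, or carrying an unexpected type."""
    missing = sorted(expected.keys() - record.keys())
    added = sorted(record.keys() - expected.keys())
    retyped = sorted(
        column
        for column in expected.keys() & record.keys()
        if not isinstance(record[column], expected[column])
    )
    return {"missing": missing, "added": added, "retyped": retyped}

# The source system dropped placed_at, added channel, and now sends amount as text.
incoming = {"order_id": "A-17", "amount": "12.50", "channel": "web"}
drift = detect_drift(incoming)
print(drift)

if any(drift.values()):
    print("Schema drift detected; route the record to review instead of loading it.")
```

A check like this can feed the monitoring and error handling described above, so that a schema change raises an alert rather than silently breaking a downstream report.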

7. Performance Optimization

Challenge: Inefficiently designed data pipelines can lead to slow processing times and increased costs.

Solutions: Optimize data partitioning strategies, leverage appropriate data formats (e.g., Parquet for analytical workloads), and tune query performance within Fabric. Monitor pipeline execution metrics and identify bottlenecks for optimization.
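A common partitioning convention in Spark and Parquet-based data lakes is a `key=value` directory layout, which lets query engines skip partitions that a filter rules out. The sketch below only computes such paths and groups rows by them; it writes no files, and the table name `sales` and the `event_date` column are hypothetical.

```python
from collections import defaultdict
from datetime import date

def partition_path(table: str, day: date) -> str:
    """Build a key=value style partition path for one calendar day."""
    return f"{table}/year={day.year}/month={day.month:02d}/day={day.day:02d}"

def group_by_partition(table: str, rows: list[dict]) -> dict:
    """Group rows by the partition path derived from their event_date."""
    groups = defaultdict(list)
    for row in rows:
        groups[partition_path(table, row["event_date"])].append(row)
    return dict(groups)

rows = [
    {"event_date": date(2025, 5, 21), "value": 1},
    {"event_date": date(2025, 5, 21), "value": 2},
    {"event_date": date(2025, 5, 22), "value": 3},
]
partitions = group_by_partition("sales", rows)
for path, members in sorted(partitions.items()):
    print(path, len(members))
```

The right partition key depends on how the data is queried: partitioning by date helps date-filtered reports, while partitioning too finely produces many small files and can slow processing down.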

8. Collaboration and Skill Gaps

Challenge: Data integration often requires collaboration across different teams with varying skill sets.

Solutions: Foster collaboration and communication between data engineers, data scientists, and business users. Provide training and upskilling opportunities to bridge skill gaps in Fabric and related technologies. Utilize Fabric's collaborative features for shared workspaces and development.


Conclusion: Charting a Data-Driven Future with Microsoft Fabric

Data integration has evolved from a supporting function to a core strategic imperative for organizational success in the digital age. Microsoft Fabric provides a comprehensive, scalable, cost-effective platform to seamlessly connect, transform, analyze, and visualize your data, unlocking insights that fuel informed decisions, optimize operational efficiency, and deliver a critical competitive advantage.

By embracing Fabric and adhering to best practices for data integration, your organization can transcend the limitations of fragmented data and harness the true power of a unified information landscape. The future belongs to those who can effectively leverage their data, and Microsoft Fabric provides the essential tools to navigate that future successfully.

Ready to transform your data into a strategic asset and secure a competitive advantage?

At WaferWire, we specialize in providing businesses like yours with seamless implementation and optimization of Microsoft Fabric for powerful data integration.

Reach out to us today for a consultation and discover how WaferWire can empower your organization to unlock the full potential of your data with Microsoft Fabric!


Copyright © 2025 WaferWire Cloud Technologies
