Managing Microsoft Fabric Capacity and Licensing Plans

Sai P

2025-06-02

Microsoft's Finance Data and Experiences (FD&E) team reduced report processing times by two-thirds, delivering insights five hours faster and cutting data generation costs by 50% through the power of Microsoft Fabric.

Similarly, Revenue Grid achieved a 60% reduction in infrastructure costs by leveraging Microsoft Fabric's capabilities.

These examples show that Microsoft Fabric can drive your analytics, data science, and data engineering on a single platform. But here's the catch: while the platform's capabilities are vast, choosing the right licensing plan can be a maze of decisions that could either save or cost your business thousands.

This blog will guide you through the essential concepts, options, and best practices for managing Microsoft Fabric’s capacity and licensing plans effectively.

What Is Capacity in Microsoft Fabric?

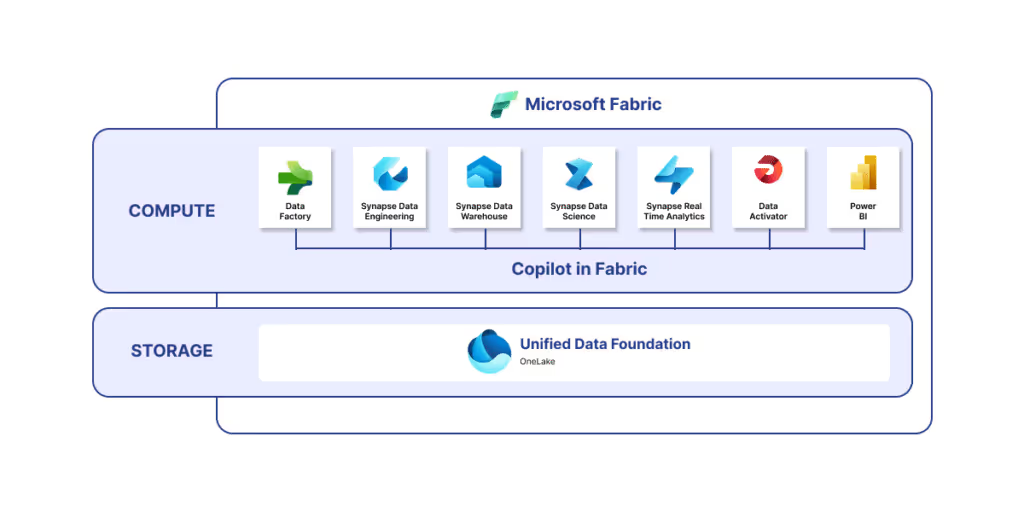

In Microsoft Fabric, capacity refers to the dedicated or shared compute resources that power your data workloads. These workloads include data ingestion pipelines, data transformation and integration tasks, real-time and batch analytics, AI and machine learning model training, as well as interactive Power BI reporting and dashboarding.

Fabric measures capacity in Capacity Units (CUs), which represent a bundle of CPU, memory, and I/O resources allocated to your environment. Different types and sizes of capacity provide different numbers of CUs and resource allocations.

In contrast to Azure, where each service has its own pricing, Microsoft Fabric simplifies the cost structure by focusing on two key components:

- Compute Capacity: One compute capacity supports all Fabric functionalities, allowing for seamless sharing across multiple projects and users. There’s no need to allocate separate capacities for different services like Data Factory or Synapse Data Warehousing, making it more efficient to manage.

- Storage: Storage costs are handled independently, but with a simplified approach, providing clearer choices and greater flexibility for users.

Now that we have established what capacity is, it’s time to look at how it is organized within Microsoft Fabric: around tenants, capacities, and workspaces.

Understanding Microsoft Fabric’s Capacity Structure

To effectively manage Microsoft Fabric’s pricing and licensing, it's important to understand how the platform’s capacity is structured around tenants, capacities, and workspaces. These components help organizations streamline their resources, optimize operational efficiency, and control costs.

- Tenant: A tenant is the highest organizational level within Microsoft Fabric. It is tied to a single Microsoft Entra ID tenant and serves as the primary unit for managing access, permissions, and subscriptions. An organization may have multiple tenants to separate resources for different departments, projects, or business units.

- Capacity: Each tenant is associated with one or more capacities. A capacity is a pool of compute resources that drives the execution of workloads within Fabric; storage is billed separately. The larger the capacity, the larger and faster the workloads it can handle. Think of capacity as the engine power that determines the performance and scalability of your data tasks.

- Workspace: Workspaces are the functional environments where data projects and workflows are executed. Each workspace is assigned to a capacity, and multiple workspaces can share the resources of the same capacity. This structure allows teams and departments to work on separate projects without needing dedicated compute resources for each, providing flexibility and cost-efficiency.
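The tenant, capacity, and workspace relationship described above can be pictured as a simple containment hierarchy. The following is only an illustrative sketch in plain Python: the class names, the `assign` method, and the `contoso` example are hypothetical and are not part of any Fabric API.

```python
from dataclasses import dataclass, field


@dataclass
class Workspace:
    name: str


@dataclass
class Capacity:
    sku: str  # for example "F64"
    workspaces: list = field(default_factory=list)

    def assign(self, workspace: Workspace) -> None:
        """Attach a workspace; every attached workspace shares this capacity's compute."""
        self.workspaces.append(workspace)


@dataclass
class Tenant:
    name: str
    capacities: list = field(default_factory=list)

    def workspace_count(self) -> int:
        """Count workspaces across all capacities in the tenant."""
        return sum(len(capacity.workspaces) for capacity in self.capacities)


# Hypothetical example: two workspaces sharing one capacity in one tenant.
tenant = Tenant("contoso")
shared = Capacity("F64")
shared.assign(Workspace("finance-reporting"))
shared.assign(Workspace("sales-analytics"))
tenant.capacities.append(shared)
print(tenant.workspace_count())
```

The point of the model is that workspaces do not own compute; they borrow it from whichever capacity they are assigned to.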

By understanding this structure, organizations can allocate resources based on their actual needs, ensuring that they optimize performance while managing costs effectively.

Once you understand the structure of Fabric, the next step is selecting the right capacity SKU to support your data workflows. The following section breaks down the SKUs in more detail to help you determine which is best suited to your organization's needs.

Types of Capacity SKUs in Microsoft Fabric

Microsoft offers two main SKU families for capacity, designed to meet varying workload demands and use cases:

1. F SKUs (Azure SKUs)

- These SKUs are dedicated compute units provisioned solely for your organization’s workloads.

- Available in sizes ranging from F2 (small) to F2048 (very large), enabling scalability for workloads from development/test environments up to enterprise-scale production.

- Provide consistent, predictable performance since resources are not shared with other tenants.

- Ideal for organizations with high data volume, complex data engineering jobs, or intensive analytics and AI use cases.

- Each step up the SKU ladder doubles the compute available (F2, F4, F8, and so on), with the number in the SKU name giving its Capacity Units. Storage is not part of the SKU and is billed separately.

2. P SKUs (Microsoft 365 SKUs)

- Originally designed for Power BI workloads, these SKUs (like P1, P2, P3) also support certain Fabric services.

- Best suited for organizations primarily focused on Power BI reporting and less intensive data engineering.

- Can be combined with F SKUs for organizations needing a mix of Power BI and Fabric services.

- Offer features like paginated reports, AI visualization, and larger dataset sizes.
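To make the F SKU range concrete, here is a small sketch that lists the sizes between F2 and F2048. It assumes that sizes double at each step and that the number in the SKU name equals its Capacity Units; the function names are hypothetical helpers, not Fabric tooling.

```python
def f_sku_sizes(smallest: int = 2, largest: int = 2048) -> list:
    """List F SKU names from smallest to largest, assuming each step doubles."""
    sizes = []
    capacity_units = smallest
    while capacity_units <= largest:
        sizes.append(f"F{capacity_units}")
        capacity_units *= 2
    return sizes


def capacity_units_of(sku: str) -> int:
    """Read the CU count from an F SKU name such as 'F64' (assumed naming rule)."""
    if not sku.startswith("F") or not sku[1:].isdigit():
        raise ValueError(f"not an F SKU name: {sku!r}")
    return int(sku[1:])


print(f_sku_sizes())
print(capacity_units_of("F64"))
```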

When it comes to costs, Microsoft Fabric offers two primary pricing models: Pay-As-You-Go (PAYG) and Reserved Capacity. Each option has distinct advantages, depending on how predictable your workload and budget are. Let’s explore these pricing models in more depth so you can make the best choice for your business.

Microsoft Fabric Licensing Plans: What Are Your Options?

Microsoft Fabric capacity is billed primarily under two pricing models, allowing organizations to choose based on usage predictability and cost preferences:

1. Pay-As-You-Go (PAYG)

- Charges accrue for the time the capacity is running, and the capacity can be paused to stop compute charges.

- Billed per second with a minimum billing duration of one minute.

- Ideal for variable workloads, development environments, or organizations preferring operational expenditure (OpEx) over capital expenditure (CapEx).

- Offers the flexibility to spin up or scale down capacity instantly without long-term commitments.

- Example pricing: An F2 SKU costs roughly $0.36 per hour of usage. Larger SKUs scale proportionally.
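The PAYG rules above (per-second billing, a one-minute minimum, and rates that scale with SKU size) can be sketched as a rough estimator. This assumes the approximate F2 rate of $0.36 per hour quoted above and strictly linear scaling with the CU count; actual prices vary by region and over time, and the function names are hypothetical.

```python
import math

F2_HOURLY_USD = 0.36  # approximate F2 rate quoted in this article; not an official price


def hourly_rate(sku: str) -> float:
    """Scale the F2 rate linearly with the CU count in the SKU name (assumption)."""
    capacity_units = int(sku[1:])
    return F2_HOURLY_USD * capacity_units / 2


def payg_cost(sku: str, seconds_running: float) -> float:
    """Estimate the PAYG charge: billed per second, with a 60-second minimum."""
    if seconds_running <= 0:
        return 0.0
    billed_seconds = max(math.ceil(seconds_running), 60)
    return hourly_rate(sku) * billed_seconds / 3600


print(f"{payg_cost('F2', 3600):.2f}")       # one hour of F2
print(f"{payg_cost('F64', 8 * 3600):.2f}")  # eight hours of F64
```

Treat the result as a planning figure only, and check the current price list for your region before budgeting.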

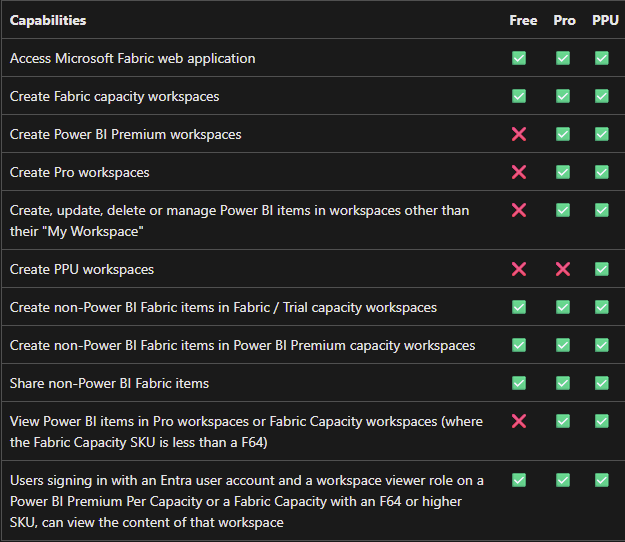

Per-User Licensing

- Free License: Allows users to access basic Fabric capabilities, excluding Power BI content sharing.

- Pro License: Required for users who create and share Power BI content within Fabric. Users who only view content also need it unless the content is hosted on a sufficiently large capacity (F64 or above).

- Premium Per User (PPU): Offers advanced features such as larger dataset sizes, incremental refresh, and AI integration on a per-user basis.

Each per-user license is tailored to specific capabilities, as seen in the following table:

2. Reserved Capacity

- Organizations commit to a fixed capacity size for one or three years upfront.

- In return, Microsoft offers significant discounts over PAYG rates (up to roughly 40-50%).

- Best suited for predictable, steady-state workloads typical in production environments.

- Requires careful capacity planning to avoid underutilization or overcommitment.

- Reserved capacity contracts also provide billing and budget stability.
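One way to reason about the PAYG versus reserved trade-off is a break-even utilization: if a reservation is discounted by a fraction d, it pays off once the capacity would otherwise run more than (1 - d) of the time on PAYG. The sketch below assumes that a PAYG capacity is paused whenever it is not needed and uses a 40% discount purely as an example; the function names are hypothetical.

```python
def reserved_break_even_utilization(discount: float) -> float:
    """Fraction of hours a capacity must run before a reservation is cheaper than PAYG."""
    if not 0 <= discount < 1:
        raise ValueError("discount must be in [0, 1)")
    return 1.0 - discount


def cheaper_option(hours_used_per_month: float, discount: float,
                   hours_in_month: float = 730) -> str:
    """Compare costs in units of the PAYG hourly rate, so the rate itself cancels out."""
    payg_cost = hours_used_per_month
    reserved_cost = hours_in_month * (1.0 - discount)
    return "reserved" if reserved_cost < payg_cost else "payg"


print(f"{reserved_break_even_utilization(0.40):.0%}")
print(cheaper_option(hours_used_per_month=500, discount=0.40))
print(cheaper_option(hours_used_per_month=200, discount=0.40))
```

With the example discount, a capacity that runs around the clock clearly favors a reservation, while one that runs only during business hours may not.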

Now that we have explored the pricing models, let's take a closer look at some additional factors that affect how you plan and optimize capacity.

Capacity Considerations

- Scaling: Fabric supports dynamic scaling, allowing you to adjust capacity size as your workload demand changes. Some tiers enable automatic scaling during peak hours to handle bursts.

- Multi-Geo Availability: For global organizations, capacity can be provisioned in multiple Azure regions to reduce latency and meet data residency requirements.

- Storage Integration: Capacity pricing does not include data storage costs, which are billed separately depending on your storage choice (Azure Data Lake Storage Gen2 or others).

- Concurrency: Higher capacity SKUs support more concurrent users and queries, essential for organizations with large teams and self-service analytics needs.

In the next section, we will break down storage-related expenses and show you how to better manage and optimize your storage usage within Microsoft Fabric.

Storage Costs in Microsoft Fabric

In Microsoft Fabric, compute capacity does not cover data storage expenses, meaning organizations must account for storage costs separately. These costs are similar to those associated with Azure Data Lake Storage (ADLS), as both are built on similar underlying technologies.

Key costs include:

- Data Storage: Storage is billed separately, based on the volume of data stored in OneLake, Fabric's unified data storage system.

- BCDR (Business Continuity and Disaster Recovery): Charges apply when data is retrieved from deleted workspaces, ensuring recovery of critical information.

- Cache Storage: Additional costs are incurred for cache storage, particularly for Kusto Query Language (KQL) databases, used to speed up queries.

- Bandwidth: Transferring data between regions incurs bandwidth charges, which are calculated based on the volume of data transferred.

This breakdown helps organizations better estimate their total storage expenses and avoid unexpected costs.
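The four cost components listed above add up to a simple monthly estimate. The sketch below is hedged accordingly: the `StorageRates` fields and all rate values are illustrative placeholders, not real prices, and should be replaced with figures from the current price list for your region.

```python
from dataclasses import dataclass


@dataclass
class StorageRates:
    """USD rates per GB. All values passed in are assumptions supplied by the caller."""
    onelake_per_gb_month: float
    bcdr_per_gb_month: float
    cache_per_gb_month: float
    bandwidth_per_gb: float


def monthly_storage_cost(rates: StorageRates, stored_gb: float, bcdr_gb: float,
                         cache_gb: float, transferred_gb: float) -> float:
    """Sum the four storage-related components described in the list above."""
    return (stored_gb * rates.onelake_per_gb_month
            + bcdr_gb * rates.bcdr_per_gb_month
            + cache_gb * rates.cache_per_gb_month
            + transferred_gb * rates.bandwidth_per_gb)


# Placeholder rates for illustration only; these are not published prices.
example_rates = StorageRates(onelake_per_gb_month=0.02, bcdr_per_gb_month=0.04,
                             cache_per_gb_month=0.25, bandwidth_per_gb=0.05)
estimate = monthly_storage_cost(example_rates, stored_gb=5000, bcdr_gb=200,
                                cache_gb=100, transferred_gb=300)
print(f"{estimate:.2f}")
```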

With capacity and storage costs understood, choosing the right plan is only the beginning. To maximize the value of your Microsoft Fabric environment, you will need to follow best practices for managing your capacity.

Best Practices for Managing Microsoft Fabric Capacity

Effectively managing Microsoft Fabric’s capacity ensures optimal performance, cost-efficiency, and seamless scaling as your organization’s data needs grow. Below are key best practices to ensure you are leveraging your resources to the fullest while maintaining control over costs.

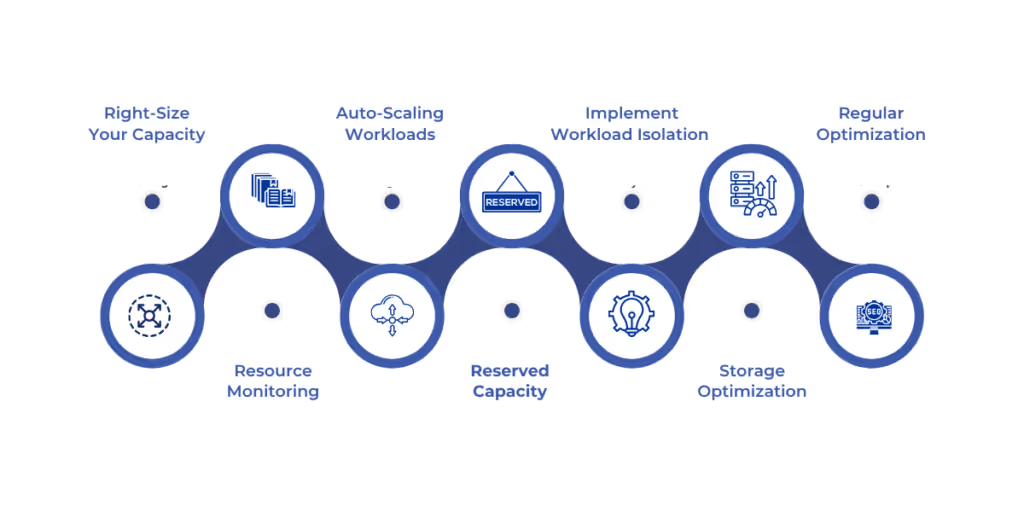

1. Right-Size Your Capacity

One of the most important aspects of managing capacity is selecting the right SKU for your workloads. Overestimating your needs can lead to unnecessary costs, while underestimating may result in performance bottlenecks.

Best Practice:

- Start small and monitor usage. Scale up as needed based on performance metrics.

- Use the Fabric Capacity Estimator to accurately forecast your capacity needs based on historical data and expected growth.
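As a rough illustration of right-sizing, the sketch below picks the smallest F SKU that covers an observed peak demand plus some headroom. The 20% headroom default and the idea of expressing peak demand in Capacity Units are assumptions made for this example; it is not a substitute for the estimator or for real usage metrics.

```python
F_SKU_CAPACITY_UNITS = [2, 4, 8, 16, 32, 64, 128, 256, 512, 1024, 2048]


def smallest_sku_for(peak_cu: float, headroom: float = 0.2) -> str:
    """Return the smallest F SKU whose CUs cover the peak demand plus headroom."""
    required = peak_cu * (1 + headroom)
    for capacity_units in F_SKU_CAPACITY_UNITS:
        if capacity_units >= required:
            return f"F{capacity_units}"
    raise ValueError("demand exceeds the largest F SKU; consider splitting workloads")


print(smallest_sku_for(50))  # 50 CUs plus 20% headroom needs 60 CUs
print(smallest_sku_for(60))  # 60 CUs plus 20% headroom needs 72 CUs
```

Because sizes double at each step, a small increase in peak demand can push you to a SKU that costs twice as much, which is one reason to start small and measure.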

2. Monitor and Track Resource Usage

Continuous monitoring of resource consumption allows you to identify inefficiencies and optimize your usage. Use Microsoft Fabric’s built-in monitoring tools, such as the Microsoft Fabric Capacity Metrics app, to track key metrics like compute (CU) utilization and storage consumption.

Best Practice:

- Set up custom alerts to notify you when resource usage is approaching predefined thresholds.

- Leverage Azure Cost Management and Microsoft Fabric’s usage analytics to keep an eye on utilization trends.
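A threshold alert of the kind described above can be sketched as follows. The example assumes you have already exported utilization samples as fractions between 0 and 1; the 80% threshold and the rule of three consecutive samples are arbitrary illustrative choices, and the function is plain Python rather than a Fabric or Azure API.

```python
def utilization_alerts(samples: list, threshold: float = 0.8, sustained: int = 3) -> list:
    """Return the indices at which utilization has stayed at or above the
    threshold for exactly `sustained` consecutive samples (one alert per episode)."""
    alerts = []
    run_length = 0
    for index, utilization in enumerate(samples):
        run_length = run_length + 1 if utilization >= threshold else 0
        if run_length == sustained:
            alerts.append(index)
    return alerts


# Hypothetical utilization readings, for example one per five-minute interval.
readings = [0.50, 0.85, 0.90, 0.95, 0.97, 0.40, 0.82, 0.81, 0.90]
print(utilization_alerts(readings))
```

Requiring several consecutive samples avoids alerting on a single short spike, which bursting would normally absorb.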

3. Use Auto-Scaling for Dynamic Workloads

Auto-scaling ensures that Fabric automatically adjusts capacity in response to fluctuating workloads. This is particularly useful for businesses with variable or unpredictable usage patterns, such as seasonal peaks or project-specific spikes.

Best Practice:

- Enable auto-scaling for compute resources in Fabric to accommodate workload spikes without manual intervention.

- Combine auto-scaling with bursting for sudden, high-intensity tasks, ensuring workloads are completed quickly without over-provisioning.

4. Leverage Reserved Capacity for Predictable Workloads

For organizations with predictable, steady-state workloads, reserved capacity offers substantial cost savings. By committing to a one- or three-year term, you can lock in lower rates and optimize your long-term resource planning.

Best Practice:

- If you have ongoing, high-demand workloads (e.g., large data integrations or reporting), consider reserving capacity to take advantage of discounted rates.

- Align your reserved capacity purchase with your growth projections to avoid paying for unused resources.

5. Implement Workload Isolation

Workload isolation involves separating different types of workloads (e.g., data engineering, analytics, reporting) into dedicated workspaces or even dedicated capacities. This ensures that critical workloads, such as real-time analytics or mission-critical AI models, do not experience slowdowns due to resource contention.

Best Practice:

- Use dedicated capacities for mission-critical workloads that require consistent performance.

- Implement workspaces to isolate different teams or projects, giving them the ability to scale independently without affecting each other.

6. Optimize Storage and Data Management

Microsoft Fabric relies on OneLake for storage, but managing your data and how it's stored can significantly impact performance and costs. Optimizing your storage strategy, including data partitioning and the use of incremental refresh, helps improve both performance and cost-efficiency.

Best Practice:

- Use data partitioning for large datasets to improve processing times and reduce the amount of data that needs to be read at once.

- Enable incremental data refresh to update only the changes in your datasets, avoiding full reloads and reducing resource consumption.
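The idea behind incremental refresh is to process only the rows that changed since the last run. Below is a minimal sketch of that pattern using a "last modified" watermark; the row layout (`id`, `modified`) and the function name are hypothetical, and this is plain Python illustrating the concept rather than how Fabric implements it.

```python
from datetime import datetime


def incremental_refresh(target: dict, source_rows: list, watermark: datetime) -> datetime:
    """Upsert only rows modified after the watermark and return the new watermark."""
    new_watermark = watermark
    for row in source_rows:
        if row["modified"] > watermark:
            target[row["id"]] = row
            new_watermark = max(new_watermark, row["modified"])
    return new_watermark


# Hypothetical source data: only the second row changed after the last refresh.
rows = [
    {"id": 1, "amount": 100, "modified": datetime(2025, 1, 1)},
    {"id": 2, "amount": 250, "modified": datetime(2025, 1, 3)},
]
loaded = {}
next_watermark = incremental_refresh(loaded, rows, watermark=datetime(2025, 1, 2))
print(sorted(loaded), next_watermark.date())
```

Storing the returned watermark and passing it to the next run is what keeps each refresh proportional to the volume of changes rather than the size of the dataset.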

7. Review and Adjust Regularly

As your organization grows and your data needs evolve, it’s important to periodically review your capacity and licensing. This includes assessing whether your current capacity is still suitable, and whether new capabilities or configurations could offer better performance or cost efficiency.

Best Practice:

- Conduct regular capacity reviews every 3-6 months to ensure that your configurations align with your current workload requirements.

- Adjust your licensing and capacity as needed to accommodate business growth, seasonal fluctuations, or new data projects.

Conclusion

Microsoft Fabric provides organizations with flexible, scalable, and efficient data management solutions. By understanding the different capacity-based and per-user licensing models, organizations can tailor their licensing strategy to meet their specific needs. Whether you choose the Pay-As-You-Go model for dynamic workloads or reserved capacity for long-term savings, Microsoft Fabric offers the tools necessary to optimize performance and cost-efficiency.

At WaferWire, we don’t just help you integrate Microsoft Fabric, we tailor it to amplify your business’s unique potential. With our expertise in data engineering, analytics, and AI, we ensure that you don’t just use Fabric, but fully leverage it to streamline operations, cut costs, and drive smarter decisions.

Don’t let complexity hold you back. Partner with WaferWire to unlock seamless scalability, smarter workflows, and a data infrastructure that works for you.

Reach out to us today — let’s make Microsoft Fabric work as hard as you do.

Copyright © 2025 WaferWire Cloud Technologies
