Understanding Microsoft Fabric Lakehouse Architecture

Mitra P

2025-04-21

Businesses are reconsidering how they store, process, and analyze information as the volume and complexity of enterprise data continue to increase.

The emergence of unified analytics architectures like the Microsoft Fabric lakehouse marks a significant shift in modern data infrastructure—blending the best elements of data lakes and data warehouses into a single, scalable solution.

Microsoft Fabric’s lakehouse architecture introduces a powerful, cloud-native approach that eliminates silos, promotes real-time analytics, and empowers cross-functional teams with a unified data foundation.

This blog explores the Microsoft Fabric lakehouse model in depth, unpacking its key components, ingestion and processing mechanisms, security capabilities, and real-world use cases. Whether you're evaluating Fabric for enterprise deployment or seeking to modernize legacy systems, this guide offers actionable insights to inform your strategy.

Microsoft Fabric Lakehouse Overview

At its core, the Fabric lakehouse is Microsoft’s answer to the growing demand for unified analytics. It merges the scalability of data lakes with the reliability and structured querying of data warehouses.

Definition and Purpose

Unlike traditional architectures that require data to be copied or transformed across systems, a lakehouse enables direct querying and analytics on raw and processed data within the same environment. Fabric’s implementation builds this over OneLake, its unified storage layer, and enhances it with AI-powered services and familiar tooling such as Power BI and Spark.

Key Features

- Scalable File Storage: Built on OneLake, the lakehouse can store massive volumes of structured, semi-structured, and unstructured data.

- ACID Compliance via Delta Lake: Fabric uses the Delta Lake format to ensure transactional consistency, making it suitable for production-grade analytics workloads.

- Integrated Toolset: Seamless compatibility with Microsoft tools like Power BI, Synapse, and Data Factory for end-to-end data engineering.

This architecture removes the traditional boundary between data lakes and warehouses, supporting both operational and analytical workloads on a single platform.

For organizations considering the adoption of Microsoft Fabric’s lakehouse architecture, it is essential to understand how these features combine to create a versatile and powerful data management platform.

At WaferWire, we help organizations harness the full potential of the lakehouse architecture, ensuring smooth integration, data governance, and performance optimization for their unique needs.

Key Components of the Lakehouse Architecture

The architecture of the Microsoft Fabric lakehouse includes several key components that work together to facilitate data management, analytics, and processing. These include:

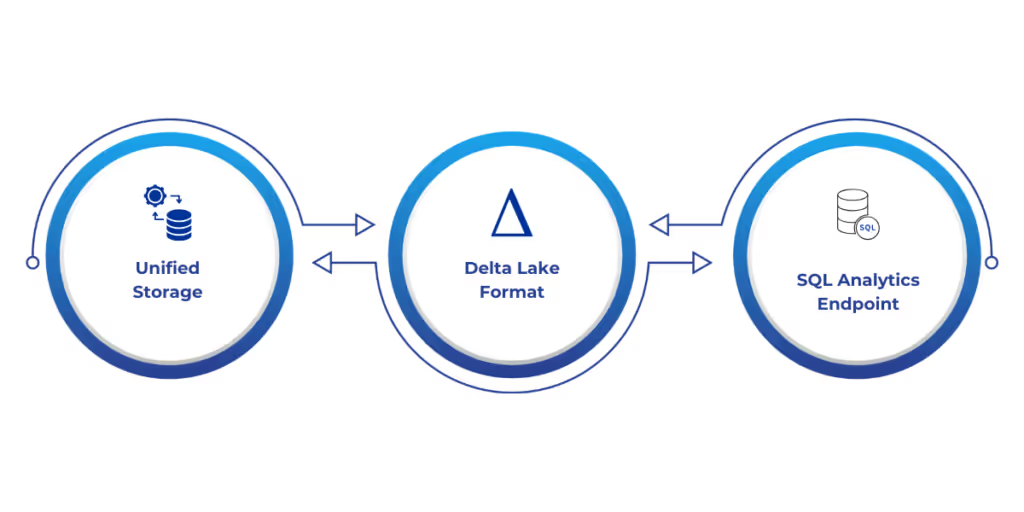

1. OneLake – Unified Data Storage

OneLake serves as the central storage foundation for the Fabric lakehouse, offering a single, logical storage layer for all your data workloads, structured or otherwise. Unlike isolated storage accounts in traditional cloud environments, OneLake provides one namespace for the entire tenant, with built-in data governance, discoverability, and collaboration features.

2. Delta Lake Format

All lakehouse tables in Fabric are stored using the open-source Delta Lake format, enabling support for:

- ACID transactions

- Time travel (versioned data)

- Schema enforcement and evolution

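Together, these features make it safer to update large tables, recover from bad writes, and reproduce past results, which removes much of the friction associated with managing and querying big data.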
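The three features above all rest on Delta Lake's transaction log: each write is recorded as an atomic commit that adds or removes data files, and any table version can be rebuilt by replaying commits up to that version. In real tables these commits are JSON files in the table's `_delta_log` folder. The pure-Python model below is a simplified, hypothetical illustration of that idea (the `ToyDeltaLog` class and the file names are invented), not the Delta protocol itself or the `delta-spark` API.

```python
from dataclasses import dataclass, field


@dataclass
class ToyDeltaLog:
    """Hypothetical, simplified stand-in for a Delta-style transaction log.

    Each commit is an ordered list of ("add" | "remove", file_path) actions.
    Only the latest schema is kept; real Delta also versions schema changes.
    """

    schema: dict
    commits: list = field(default_factory=list)

    def commit(self, actions, new_schema=None, evolve=False):
        """Validate first, then append one commit; return its version number."""
        if new_schema is not None and new_schema != self.schema:
            # Schema enforcement: existing columns must keep their types.
            keeps_existing = all(
                new_schema.get(col) == typ for col, typ in self.schema.items()
            )
            # Schema evolution: additive changes only, and only when requested.
            if not (evolve and keeps_existing):
                raise ValueError("schema mismatch: write rejected")
            self.schema = dict(new_schema)
        self.commits.append(list(actions))
        return len(self.commits) - 1

    def snapshot(self, version=None):
        """Replay commits 0..version to find the data files live at that version."""
        if version is None:
            version = len(self.commits) - 1
        live = set()
        for actions in self.commits[: version + 1]:
            for op, path in actions:
                if op == "add":
                    live.add(path)
                elif op == "remove":
                    live.discard(path)
        return live


log = ToyDeltaLog(schema={"id": "int", "amount": "double"})
v0 = log.commit([("add", "part-000.parquet")])
v1 = log.commit([("add", "part-001.parquet")])
# An UPDATE can rewrite a file: remove the old one and add its replacement.
v2 = log.commit([("remove", "part-000.parquet"), ("add", "part-002.parquet")])

print(sorted(log.snapshot()))    # latest version
print(sorted(log.snapshot(v0)))  # time travel to version 0

try:
    log.commit([("add", "part-003.parquet")], new_schema={"id": "string"})
except ValueError as err:
    print(err)  # the write is rejected and no commit is appended
```

Because a rejected write never reaches the log, readers only ever see complete commits, which is the intuition behind the atomicity guarantee.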

3. SQL Analytics Endpoint

Every lakehouse created in Fabric automatically exposes a read-only SQL analytics endpoint, allowing teams to query their Delta tables using familiar T-SQL syntax. This boosts adoption among business users and developers alike while enabling high-performance analytics without moving data to external engines.

These components ensure that the lakehouse architecture is not just scalable but also flexible, capable of handling complex data operations from ingestion to real-time analytics.

As businesses increasingly look for unified solutions, WaferWire Cloud Technologies plays a key role in helping organizations implement and optimize these components. We guide clients through best practices for setting up and utilizing the lakehouse architecture to achieve high performance and scalability.

Data Ingestion and Storage

Data ingestion into the Fabric lakehouse is flexible and supports multiple pipelines and interfaces. Here’s how data typically flows into the system:

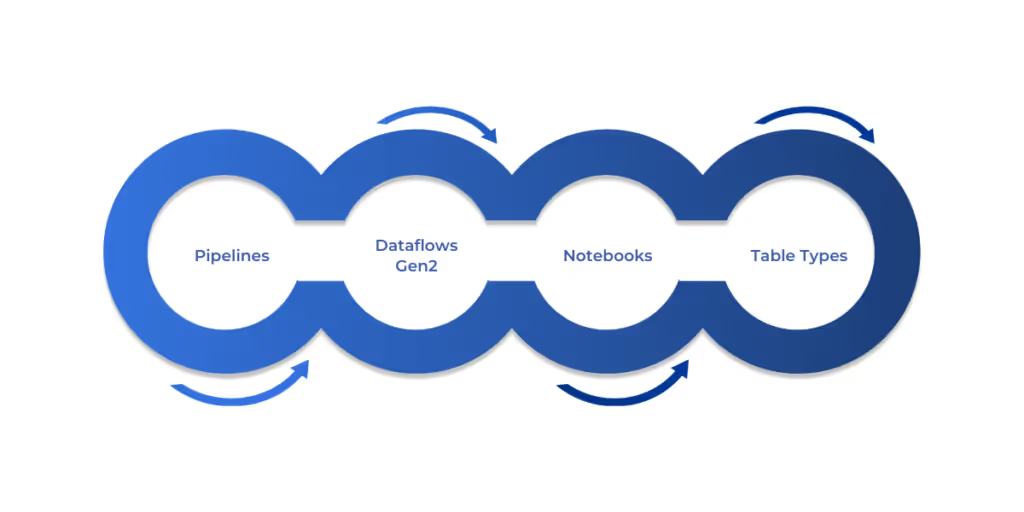

1. Pipelines

Microsoft Fabric’s Data Factory-style pipelines allow users to connect to various sources (on-premises, cloud, SaaS) and ingest data with scheduled workflows.

2. Dataflow Gen2

Fabric also supports Dataflow Gen2, an advanced low-code tool for transforming and ingesting data directly into lakehouses. It leverages Power Query and integrates seamlessly with OneLake.

3. Notebooks

For more technical users, Spark Notebooks allow programmatic ingestion using Python or Scala. This is especially useful for streaming, real-time events, or machine learning pipelines.

4. Managed and Unmanaged Tables

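- Managed tables are handled entirely by Fabric: the lakehouse controls both the metadata and the storage location, so dropping the table also deletes its data.

- Unmanaged (external) tables register metadata over data files at a location you specify; dropping the table removes only the metadata and leaves the files in place, adding flexibility in hybrid data scenarios.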
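The practical difference between the two table types shows up most clearly when a table is dropped. The sketch below is a hypothetical, pure-Python model of a metastore: the `ToyCatalog` class is invented, and the `Tables/` and `Files/` paths are only loosely modeled on the lakehouse's Tables and Files areas. In a Fabric notebook the real operations would be Spark SQL `CREATE TABLE` and `DROP TABLE` statements.

```python
class ToyCatalog:
    """Hypothetical metastore mapping table name -> (storage path, managed flag)."""

    def __init__(self, storage):
        self.storage = storage  # dict of path -> data; stands in for OneLake files
        self.tables = {}

    def create_managed(self, name, rows):
        path = f"Tables/{name}"  # the catalog chooses where managed data lives
        self.storage[path] = rows
        self.tables[name] = (path, True)

    def create_unmanaged(self, name, path):
        # Only metadata is recorded; the files must already exist at `path`.
        if path not in self.storage:
            raise FileNotFoundError(path)
        self.tables[name] = (path, False)

    def read(self, name):
        path, _ = self.tables[name]
        return self.storage[path]

    def drop(self, name):
        path, managed = self.tables.pop(name)
        if managed:
            del self.storage[path]  # managed: the data goes with the table
        # unmanaged: the files stay; only the catalog entry was removed


storage = {"Files/raw/sales": [{"id": 1}]}
catalog = ToyCatalog(storage)
catalog.create_managed("orders", [{"id": 10}])
catalog.create_unmanaged("sales_ext", "Files/raw/sales")

catalog.drop("orders")
catalog.drop("sales_ext")
print(sorted(storage))  # only the externally located files remain
```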

5. Shortcuts

One of the most innovative features of Microsoft Fabric is Shortcuts, which allow users to create logical links to external data sources—without moving the data. Whether it’s from Azure Data Lake Storage Gen2, Amazon S3, or even another OneLake location, you can reference and use this data in your lakehouse as if it were natively stored there. This reduces redundancy, saves costs, and ensures data consistency across domains.
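Conceptually, a shortcut is a pointer that is resolved when data is read, rather than a copy made when the shortcut is created. The toy model below illustrates only that idea: `ExternalStore`, `ToyLakehouse`, and the paths are hypothetical, and real shortcuts are created through Fabric itself, not with code like this.

```python
class ExternalStore:
    """Hypothetical stand-in for an external location such as ADLS Gen2 or Amazon S3."""

    def __init__(self):
        self.objects = {}


class ToyLakehouse:
    def __init__(self):
        self.local = {}      # data physically stored in the lakehouse
        self.shortcuts = {}  # path -> (external store, key): a pointer, not a copy

    def create_shortcut(self, path, store, key):
        self.shortcuts[path] = (store, key)  # no data is moved here

    def read(self, path):
        if path in self.shortcuts:
            store, key = self.shortcuts[path]
            return store.objects[key]  # resolved against the source at read time
        return self.local[path]


s3 = ExternalStore()
s3.objects["sales/2025.csv"] = "id,amount\n1,9.99"

lh = ToyLakehouse()
lh.create_shortcut("Files/s3_sales", s3, "sales/2025.csv")

# The source changes after the shortcut exists; readers see the change
# immediately because nothing was copied into the lakehouse.
s3.objects["sales/2025.csv"] += "\n2,5.00"
print(lh.read("Files/s3_sales"))
```

This is why shortcuts avoid duplicate copies drifting out of sync: there is only ever one copy of the data.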

6. Mirroring (Preview)

The Mirroring feature allows near-real-time syncing of external databases into Fabric—such as Azure Cosmos DB or SQL-based systems—so changes made in the source system are automatically reflected in Fabric. It enables low-latency analytics on operational data and reduces the need for complex replication setups.
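Mirroring belongs to the broad change-data-capture family, in which changes from a source system are replayed, in order, onto a replica. Fabric's internal mechanism is not described here, so the sketch below is a generic, hypothetical illustration of applying an ordered insert/update/delete change feed keyed by primary key; `apply_changes` and the event format are assumptions.

```python
def apply_changes(replica, changes):
    """Apply an ordered change feed of (op, key, row) events to a replica dict."""
    for op, key, row in changes:
        if op in ("insert", "update"):
            replica[key] = row  # upsert, so replaying the same feed is harmless
        elif op == "delete":
            replica.pop(key, None)  # tolerate deletes for keys already gone
        else:
            raise ValueError(f"unknown operation: {op}")
    return replica


source_changes = [
    ("insert", 1, {"id": 1, "status": "new"}),
    ("insert", 2, {"id": 2, "status": "new"}),
    ("update", 1, {"id": 1, "status": "shipped"}),
    ("delete", 2, None),
]

replica = apply_changes({}, source_changes)
print(replica)
```

Order matters: applying the update before the insert, or the delete before the insert, would leave the replica out of step with the source, which is why change feeds are consumed sequentially.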

With such flexible ingestion capabilities, Microsoft Fabric’s lakehouse becomes not just a storage solution but the beating heart of your analytics strategy. Whether you prefer visual tools, script-driven pipelines, or zero-copy access via shortcuts, Fabric adapts to your needs while scaling with your data.

When integrating and optimizing data ingestion processes, WaferWire Cloud Technologies provides expert guidance to ensure organizations leverage these capabilities effectively. Our team assists in designing streamlined pipelines, ensuring scalable data ingestion, and implementing governance practices across platforms.

Processing and Transformation

Data transformation is one of the key capabilities of the Microsoft Fabric lakehouse. The lakehouse supports various transformation techniques, including:

1. Notebooks and Spark SQL

Notebooks provide a rich environment for coding, running, and visualizing data transformations, supporting both Spark SQL and Python-based operations for complex transformations.

2. Real-Time and Streaming Data

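The lakehouse architecture also supports real-time streaming, for example through Spark Structured Streaming in notebooks or through Eventstream with a lakehouse destination. This lets organizations process event-based data and analyze it as it arrives, which is essential for use cases like IoT telemetry and customer behavior analysis.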
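At the heart of most stream processing is incremental aggregation: each micro-batch of events updates running state, and results are emitted after every batch. The pure-Python sketch below counts events per device in tumbling windows. It is a conceptual illustration with hypothetical names and data; in a Fabric notebook you would more likely express this with Spark Structured Streaming's `groupBy(window(...))`, which also uses watermarks to bound how long to wait for late events.

```python
from collections import defaultdict


def tumbling_window_counts(batches, window_seconds):
    """Incrementally count events per (window start, device) across micro-batches."""
    state = defaultdict(int)  # running aggregates carried from batch to batch
    for batch in batches:
        for ts, device in batch:
            window_start = ts - ts % window_seconds  # align to the window boundary
            state[(window_start, device)] += 1
        yield dict(state)  # snapshot of the results after this micro-batch


batches = [
    [(0, "sensor-a"), (4, "sensor-a"), (7, "sensor-b")],
    # ts=9 arrives late; with no watermark in this toy, it still lands in window 0.
    [(12, "sensor-a"), (9, "sensor-b")],
]

for result in tumbling_window_counts(batches, window_seconds=10):
    print(result)
```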

3. Medallion Architecture

Fabric encourages the Bronze → Silver → Gold layering of data:

- Bronze: Raw data

- Silver: Cleansed and conformed data

- Gold: Business-ready datasets for reporting and AI models

This approach allows organizations to perform sophisticated data transformations while maintaining data integrity and performance. It also provides a clear path from raw data to business-ready insights.
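Here is a minimal, pure-Python sketch of the three layers, assuming a small hypothetical orders feed. In Fabric each layer would typically be a Delta table (often in its own lakehouse) written from a notebook or pipeline; plain lists and dicts are used here only so the cleansing and aggregation logic is easy to follow.

```python
from collections import defaultdict

# Bronze: raw records exactly as they arrived, including a duplicate and a bad row.
bronze = [
    {"order_id": "1", "region": "east ", "amount": "100.0"},
    {"order_id": "1", "region": "east ", "amount": "100.0"},  # duplicate delivery
    {"order_id": "2", "region": "West", "amount": "250.5"},
    {"order_id": "3", "region": "west", "amount": "not-a-number"},  # invalid amount
]


def to_silver(rows):
    """Cleanse and conform: cast types, normalize text, drop invalid rows and duplicates."""
    seen, out = set(), []
    for r in rows:
        try:
            amount = float(r["amount"])
        except ValueError:
            continue  # in practice, invalid rows are often routed to a quarantine table
        order_id = int(r["order_id"])
        if order_id in seen:
            continue
        seen.add(order_id)
        out.append({"order_id": order_id, "region": r["region"].strip().lower(), "amount": amount})
    return out


def to_gold(rows):
    """Business-ready aggregate: total revenue per region."""
    totals = defaultdict(float)
    for r in rows:
        totals[r["region"]] += r["amount"]
    return dict(totals)


silver = to_silver(bronze)
gold = to_gold(silver)
print(gold)
```

Keeping bronze untouched means silver and gold can always be rebuilt if a cleansing rule changes, which is what makes the path from raw data to insights traceable.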

At WaferWire, we ensure that organizations not only implement these transformation techniques but also optimize them for performance, scalability, and governance. Our team works to ensure smooth transitions between transformation stages and helps clients leverage the Medallion architecture for efficient data management.

Data Consumption and Visualization

Once data has been ingested and transformed, it is ready for consumption. Microsoft Fabric lakehouse offers various tools for data exploration and visualization:

1. Direct Lake for Power BI

One of Fabric’s standout features is Direct Lake mode. It lets Power BI semantic models read Delta Lake tables in OneLake directly, instead of importing a separate copy of the data on a refresh schedule. This leads to faster, near-real-time reporting.

2. Power Query Integration

Users can manipulate data visually through Power Query, simplifying transformation for business analysts.

3. Custom Dashboards and Reports

With Direct Lake and semantic models, teams can build rich dashboards without duplicating data. Keeping a single copy reduces maintenance and avoids mismatches between reports and their source tables.

These capabilities allow organizations to derive valuable insights from their data with ease. Microsoft Fabric’s integration with Power BI empowers business users to make data-driven decisions without relying on IT teams for every request.

WaferWire works closely with businesses to implement best practices for creating interactive reports and dashboards. We help streamline the connection between Microsoft Fabric and Power BI, ensuring that organizations can leverage the full potential of their data for actionable insights.

Security and Governance

Ensuring data security and governance is paramount, especially when managing sensitive or regulated data. Microsoft Fabric’s lakehouse architecture includes several features to ensure that data is secure, consistent, and accessible only to authorized users:

1. Access Control

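Fabric supports granular permissions at several levels: workspace roles (Admin, Member, Contributor, and Viewer), item-level sharing, and object-level permissions on tables and views through the SQL analytics endpoint.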

2. Data Masking and Encryption

Dynamic data masking and row-level security can be defined on the SQL analytics endpoint and warehouse, and Fabric encrypts data at rest and in transit, helping ensure sensitive data is protected.
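Row-level security and dynamic masking both act at query time: the stored data is unchanged, but each user sees a filtered and possibly masked result. The hypothetical sketch below mimics that behavior in plain Python (the `USERS` table, the `query` function, and the sample rows are made up); in Fabric these rules are defined in T-SQL on the SQL analytics endpoint or warehouse, not in application code. The mask imitates the output format of SQL's `email()` masking function, which shows the first letter followed by `XXX@XXXX.com`.

```python
ROWS = [
    {"customer": "Ana", "email": "ana@contoso.com", "region": "east"},
    {"customer": "Ben", "email": "ben@contoso.com", "region": "west"},
]

# Hypothetical user attributes: which region each user may see (None = all)
# and whether they hold an UNMASK-style privilege.
USERS = {
    "east_analyst": {"region": "east", "unmask": False},
    "auditor": {"region": None, "unmask": True},
}


def mask_email(email):
    """Mimic an email-style mask: keep the first character, hide the rest."""
    return email[0] + "XXX@XXXX.com"


def query(user, rows=ROWS):
    profile = USERS[user]  # unknown users raise KeyError, i.e. deny by default
    # Row-level security: a filter predicate applied to every read.
    visible = [r for r in rows if profile["region"] in (None, r["region"])]
    if profile["unmask"]:
        return visible
    # Dynamic data masking: values are masked in the result, not in storage.
    return [{**r, "email": mask_email(r["email"])} for r in visible]


print(query("east_analyst"))
print(len(query("auditor")))
```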

3. Governance

Fabric integrates with Microsoft Purview for unified data governance and auditing. WaferWire’s consultants help companies establish enterprise-grade policies and controls to ensure compliance with regulations like GDPR and HIPAA.

These features help organizations meet security and compliance requirements while maintaining efficient data access and management.

At WaferWire, we help clients implement security and governance measures within the Microsoft Fabric ecosystem. Our team assists in designing access control strategies and ensuring compliance across various platforms.

Use Cases and Flexibility

The Fabric lakehouse model is adaptable across industries, offering support for:

- Retail: Customer segmentation and inventory analytics

- Finance: Fraud detection and risk modeling

- Healthcare: Clinical data processing and predictive insights

- Manufacturing: IoT telemetry and quality monitoring

Its ability to handle structured, semi-structured, and unstructured data also makes it ideal for AI, machine learning, and advanced analytics projects.

Conclusion

Microsoft Fabric lakehouse architecture is an innovative and scalable solution that combines the best of data lakes and data warehouses. With its unified storage, robust transformation capabilities, and seamless integration with Power BI, it provides a comprehensive platform for managing large datasets and performing advanced analytics. By adopting this architecture, organizations can improve efficiency, enhance data governance, and unlock powerful insights from their data.

As businesses continue to embrace data-driven decision-making, the lakehouse architecture will play a critical role in how they manage and analyze data. For organizations looking to implement Microsoft Fabric lakehouse, WaferWire Cloud Technologies is here to help. Our team offers tailored solutions, ensuring smooth integration, optimal performance, and robust governance across all your data initiatives.

Ready to explore how Microsoft Fabric can transform your data strategy?

Contact WaferWire today to learn more about how our expertise can help you make the most of this cutting-edge architecture.

FAQs

1. What is the main difference between a data lake and a Lakehouse?

A data lake is designed to store vast amounts of raw, unstructured data, while a Lakehouse combines the best features of data lakes and data warehouses. A Lakehouse provides structured storage with the flexibility of a data lake and the transactional capabilities of a data warehouse, ensuring data consistency, scalability, and improved performance.

2. Can Microsoft Fabric Lakehouse handle both batch and real-time data processing?

Yes, Microsoft Fabric Lakehouse architecture supports both batch and real-time data processing. It combines batch processing with Spark and Spark SQL with streaming capabilities such as Spark Structured Streaming to enable real-time analytics, which makes it ideal for handling time-sensitive data from sources like IoT devices and customer interactions.

3. How does Delta Lake enhance data management in Microsoft Fabric?

Delta Lake provides ACID transactions, schema enforcement, and version control. These features ensure data integrity, allow time travel for historical data access, and keep data consistent whether it is processed in batch or real-time scenarios. Delta tables bring these guarantees to the tabular data in a Lakehouse, while unstructured files can still be stored alongside them in the same environment.

4. What is OneLake, and how does it integrate into the Lakehouse architecture?

OneLake is the unified storage solution used in Microsoft Fabric. It consolidates data storage across various workloads and integrates seamlessly with the Lakehouse architecture to simplify data management. OneLake supports the storage of all data types, making it easy to scale and manage data across different stages of transformation and analysis.

5. How does the Medallion architecture benefit data processing in a Lakehouse?

The Medallion architecture (Bronze, Silver, and Gold layers) provides a structured approach to data processing. The Bronze layer stores raw data, the Silver layer contains cleaned and transformed data, and the Gold layer holds curated, business-ready data. This approach ensures that data processing is organized, traceable, and efficient, helping businesses maintain high-quality datasets at every stage.

Copyright © 2025 WaferWire Cloud Technologies
