Setting Up and Using dbt with Microsoft Fabric

Sai P

2025-07-16

Talk to our cloud experts

Subject tags

Businesses constantly seek efficient tools to process, model, and analyze their data. Microsoft Fabric, with its unified platform for data storage, processing, and analytics, has become the go-to solution. dbt (Data Build Tool), as a robust data transformation tool used extensively in data engineering processes, is one such tool that boosts Microsoft Fabric's potential.

Combining dbt and Microsoft Fabric has a lot to offer in terms of simplifying your data transformations, automating processes, and facilitating self-service analytics. In this blog, we will walk you through integrating dbt with Microsoft Fabric so you can take full advantage of its capabilities for modeling and analytics.

Key Takeaways– Setting Up and Using dbt with Microsoft Fabric

- dbt Enhances Microsoft Fabric: dbt (Data Build Tool) streamlines data transformation within Microsoft Fabric, enabling SQL-based modeling, automation, and clean analytics-ready datasets.

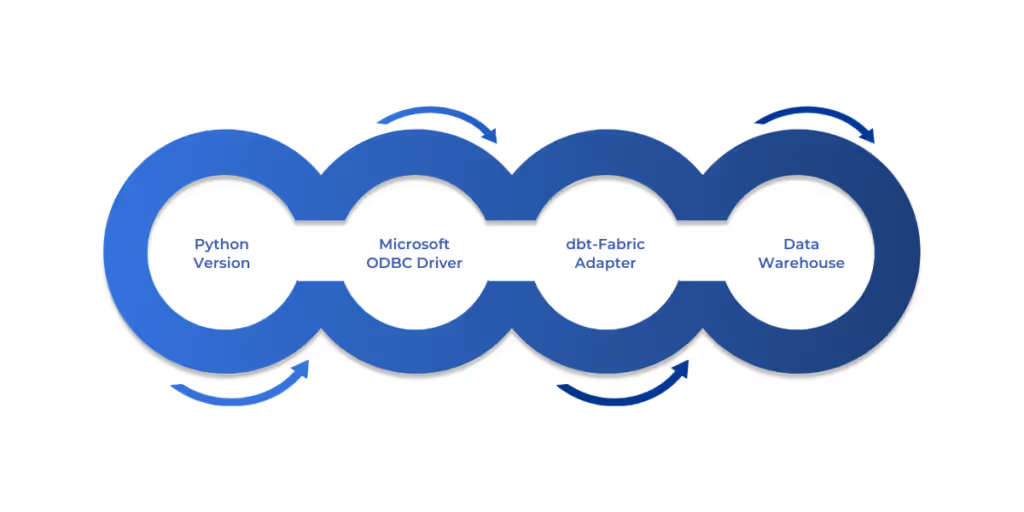

- Prerequisites Include: Python 3.7+, Microsoft ODBC Driver for SQL Server, the dbt-fabric adapter, and a Fabric Data Warehouse are required for integration.

- Setup Steps: Configure profiles.yml and dbt_project.yml, clone a sample project (like jaffle_shop), and run dbt run and dbt test to verify setup.

- Airflow Integration: Use Apache Airflow to automate and schedule dbt workflows via DAGs, improving pipeline reliability and reducing manual work.

Prerequisites for setting up dbt

Before diving into setting up dbt with Microsoft Fabric, there are a few prerequisites you need to ensure are in place. These prerequisites are essential for smooth integration and operation of dbt with the platform.

- Python Version: Ensure that you have Python version 3.7 or higher installed. dbt relies on Python to run, and using an incompatible version could lead to issues.

- Microsoft ODBC Driver: To connect your data warehouse with dbt, you need to install the Microsoft ODBC Driver for SQL Server. This allows dbt to communicate with your Microsoft Fabric Data Warehouse.

- dbt-Fabric Adapter: You will also need to install the dbt-fabric adapter via pip. This adapter is specifically designed to integrate dbt with Microsoft Fabric’s data warehouse, enabling smooth data transformation workflows.

- Microsoft Fabric Data Warehouse: Finally, you will need a Microsoft Fabric Data Warehouse. This is the data storage solution you will use to store your data models, and run the dbt transformations.

Once you have those prerequisites in place, you are ready to set up dbt with Microsoft Fabric. Now let's move on to the next step.

Visit Microsoft’s official documentation for more information.

Setting Up dbt with Microsoft Fabric

Now that the foundation has been laid out, let's take a look at setting up dbt with Microsoft Fabric. This consists of several key areas for integration and configuration.

- Cloning a Sample dbt Project: First, you can clone a sample dbt project, such as the popular jaffle_shop. This project provides a good starting point and allows you to familiarize yourself with how dbt works in a live environment. Clone this project into your development environment so you can experiment with its configuration and use cases.

- Configuring the profiles.yml File: The profiles.yml file contains connection details that allow dbt to connect to your data warehouse. In this step, you’ll configure the profiles.yml with the necessary credentials, server details, and schema information. Ensure that your connection information matches the setup of your Microsoft Fabric Data Warehouse.

- Setting Up the dbt_project.yml File: The dbt_project.yml file tells dbt how to configure the data warehouse configuration, such as models, materializations, and paths. Ensure that you set this file up with the paths relevant to your data models and define the configurations specific to your project requirements.

- Running Initial dbt Commands: Once you have configured, you can execute initial dbt commands like dbt run to run transformations and dbt test to check that everything is in working condition. These are the initial steps towards ensuring that your environment is working fine.

With dbt in place, it's now time to integrate dbt with Apache Airflow for orchestration.

Integrating dbt with Apache Airflow in Microsoft Fabric

A key part of using dbt in production environments is orchestrating and automating the workflow. This is where Apache Airflow comes in, helping you automate the running of dbt tasks and manage dependencies across your data pipeline.

- Overview of Apache Airflow: Apache Airflow is an open-source tool for orchestrating complex data workflows. It allows you to define, schedule, and monitor workflows as Directed Acyclic Graphs (DAGs), making it a perfect companion for dbt in Microsoft Fabric.

- Creating a dbt Project within an Apache Airflow Job: To use dbt with Airflow, you first create a dbt project as part of an Airflow DAG. This enables you to schedule and execute dbt commands automatically based on predefined schedules. You can include dbt operations like dbt run or dbt test as tasks within your Airflow workflow.

- Configuring the Airflow Directed Acyclic Graph (DAG): You will need to create a DAG for Airflow, this model defines the order in which the dbt tasks are run. Airflow will manage when dbt jobs run and ensure they are run in the specified order.

- Running and Monitoring the DAG: Once you set up the DAG, you can execute it within Airflow. The interface will allow you to follow the execution of each dbt task, check logs, and troubleshoot errors if and when they arise.

With dbt running smoothly within your data pipeline and integrated with Airflow, the next step is to follow best practices for managing dbt projects in Microsoft Fabric.

Best Practices for Using dbt in Microsoft Fabric

When using dbt with Microsoft Fabric, it’s essential to follow best practices to ensure efficient workflows, maintainability, and collaboration within your teams. Here are some key strategies for optimal dbt usage:

- Organizing dbt Models and Directories: Organize your dbt project with proper, consistent naming schemes for models, directories, and files. This will make it easier to manage your project, particularly as it gets larger.

- Implementing Version Control: Use version control using Git to version your dbt project. This allows team members to collaborate and have changes tracked and easily reversible if needed.

- Utilizing dbt's Testing and Documentation Features: dbt comes with native testing for your data transformations, so you can find issues before they become problems. Furthermore, dbt generates automated documentation of your models to enable you and your team to comprehend the logic and dependency of the transformations.

- Setting Up Automated Deployments and Schedules: Automate your dbt project deployment using Airflow or other orchestration tools. This ensures that your transformations run on schedule, minimizing manual intervention and ensuring consistency in your data pipelines.

Also Read: Implementing Data Fabric for Hybrid Cloud

With these best practices in mind, let's move forward and explore how to troubleshoot common issues that may arise during dbt setup and use.

Troubleshooting Common Issues

Although dbt is highly capable, you can still find some problems along the way. Here are some common problems and feasible solutions to keep your setup intact:

- Connectivity Issues Between dbt and Microsoft Fabric: If dbt cannot connect to your Microsoft Fabric Data Warehouse, you should double-check your profiles.yml setup. Make sure that the right credentials, host information, and database names are entered.

- Errors in profiles.yml Configuration: If you are experiencing errors while running dbt, ensure that the connection information in profiles.yml is accurate and according to your warehouse setup. Misconfigurations like incorrect database URLs or authentication protocols are typical causes of problems.

- Handling dbt Run Failures: In case of a failure in your dbt run, check the logs for error messages. Most likely, problems will be due to improperly formed SQL queries, missing tables, or unfinished configurations. dbt's comprehensive error logs will facilitate identifying the root of the problem.

- Compatibility Between dbt Versions and Dependencies: Verify that your dbt version is in sync with the rest of your environment dependencies. Incompatible versions of dbt or Python can cause cryptic errors, so keep your dependencies updated at all times.

With troubleshooting behind us, let’s discuss best practices for schema management in dbt to make sure you get the most out of your setup.

Conclusion

Integrating dbt with Microsoft Fabric is a powerful combination that can significantly enhance your data transformation processes. From easy setup to seamless integration with Power BI and Apache Airflow, dbt makes it easier to manage and analyze data efficiently.

However, implementing dbt in your organization is just the beginning. To maximize its potential, follow best practices, troubleshoot issues as they arise, and continually optimize your workflows.

At WaferWire, our Microsoft and AI specialists can assist you throughout each process of implementing dbt with Microsoft Fabric, from installation to high-level customization. Whether you require assistance in setting up your environment, streamlining your workflows, or integrating Power BI, we're here to support you. Contact WaferWire today to elevate your data transformation procedures to the next level.

FAQs

Q1. Why integrate dbt with Microsoft Fabric for data transformations?

dbt lets you use SQL to transform raw data into analytics-ready models, streamlining your pipeline within Fabric’s data warehouse. This ensures clean, reliable data for reports, like summarizing sales for business insights.

Q2. What components do you need to use dbt with Fabric?

You need Python 3.7+, the Microsoft ODBC Driver for SQL Server, the dbt-fabric adapter, and a Fabric Data Warehouse. These ensure dbt connects seamlessly to transform data in your Fabric environment.

Q3. How does Airflow automation benefit dbt in Fabric?

Airflow automates dbt tasks via DAGs, scheduling dbt run and dbt test jobs. For example, you can automate daily updates to a customer model, with Fabric’s Airflow UI tracking execution for reliability.

Q4. How can you resolve dbt connectivity issues in Fabric?

Check profiles.yml for correct server and authentication details. Verify the ODBC driver installation. Run dbt debug to test connectivity, and ensure Azure AD credentials are valid to fix login errors.

Q5. What’s the value of dbt’s testing in Fabric projects?

dbt’s tests ensure data quality by checking for nulls or duplicates. For example, testing a sales table for missing IDs prevents errors in Fabric reports, keeping your analytics accurate and trustworthy.

Subscribe to Our Newsletter

Get instant updates in your email without missing any news

Copyright © 2025 WaferWire Cloud Technologies

.png)